We’ve implemented a bunch of animations! Our doll now zips around and feels alive. The animation system is not quite done yet, but as a change of pace I’m going to focus now on terrain collisions.

My concept for THRUST//DOLL has the character travelling through a level made of organic shapes, like a womb or a cave. Mesh/terrain colliders do not yet exist in Latios Framework, so if I wanted to implement collisions with walls, I’d have to split all the parts of the terrain into convex chunks.

Approach 1: 700 convex colliders

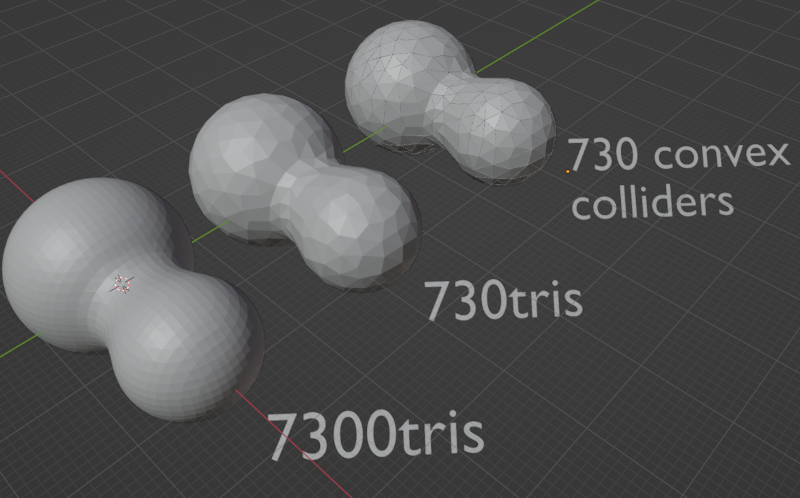

I experimented a little with doing this in Blender. I’d create the level using metaballs, and then convert them to a mesh, which samples the implicit surface using the Marching Cubes algorithm or something similar. To get a nice smooth surface, you need to set the resolution fairly high; however, I think one collider per face at that resolution would kill the system, even if the colliders are static.

So, to create colliders, I could decimate this surface. To get convex colliders, I could then apply the ‘edge split’ and ‘solidify’ modifiers to essentially generate one collider per face. (I could also use triangle colliders, but I’d have to set up custom authoring for that.)

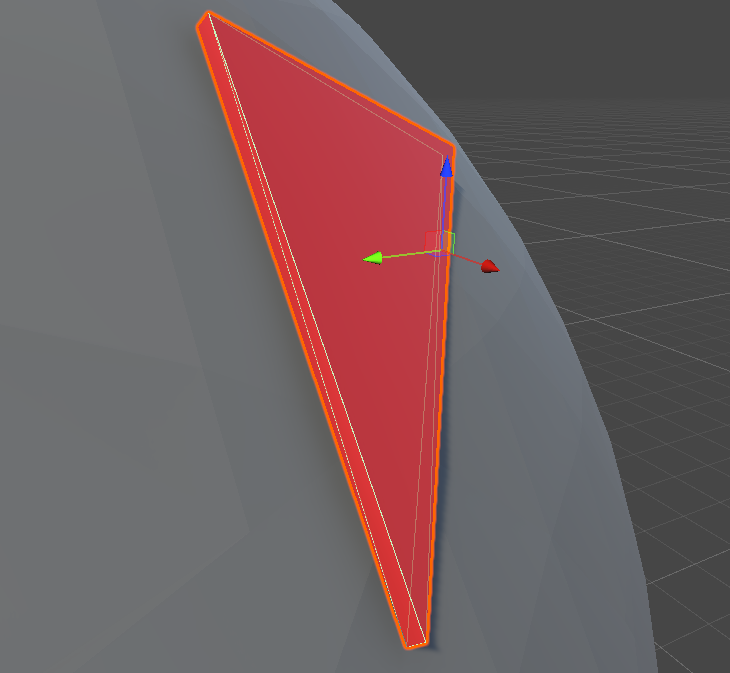

Here’s an example of how this workflow would look. I’m going to call this thing the ‘peanut’.

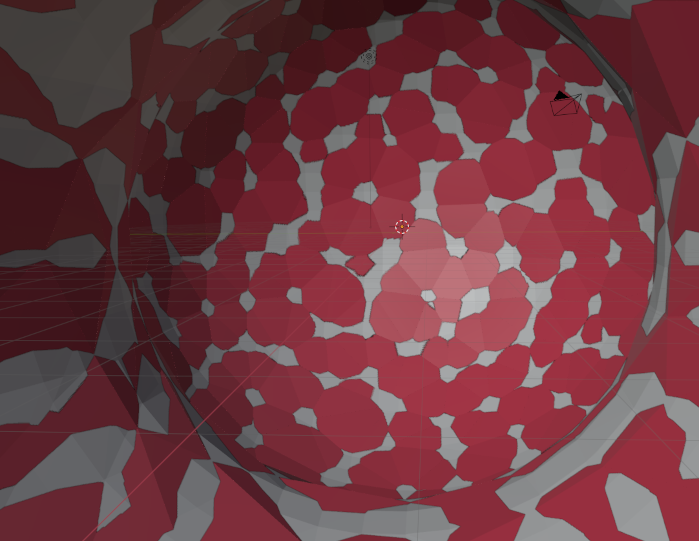

Viewed from the inside, the colliders (red) are sometimes inside, sometimes outside the higher-res mesh. So the resulting collisions may sometimes clip or have a gap. We’ll see if that’s a real problem.

This approach has advantages and drawbacks. The big advantage is that we can use Psyshock’s spatial acceleration structures. It won’t matter that all these colliders are convex mesh colliders, since most of them will be dismissed by the broadphase structure.

The disadvantage is that importing it into Unity will create hundreds or thousands of distinct meshes and GameObjects, and I need to find some way to tag these and then bake them into entities which can be used to generate a collision layer. If I make a change to the level, I will have to regenerate this structure and reimport it, which sounds like a pain.

For the sake of argument, let’s test how this might work. I’ll take this ‘peanut’ shape, put it in Unity, and build a collision layer.

Getting Unity to eat this peanut

To get a convex mesh collider for each piece… I could go through and manually add the Mesh Collider component to every single GameObject. However, for a few hundred objects, that just isn’t practical! So we will need to create the colliders in code.

How would this be done? A ConvexCollider in Latios stores the actual collider on a blob component. If I’m not applying the Mesh Collider component on the game objects, this would need to be generated using a smart blobber.

Alternatively, as a prep step, I could create a MenuItem function on a MonoBehaviour which does the job. That would look like this…

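```csharp
// UnityEditor only exists in the editor, so keep this out of player builds
// (alternatively, put the file in a folder named "Editor").
#if UNITY_EDITOR
using UnityEngine;
using UnityEditor;

public class AddCollidersMenuItem : MonoBehaviour
{
    [MenuItem( "Utils/Add convex colliders and remove renderers" )]
    static void AddConvexCollidersAndRemoveRenderers()
    {
        foreach( GameObject go in Selection.gameObjects )
        {
            // One convex MeshCollider per selected piece of the level
            MeshCollider m = go.AddComponent<MeshCollider>();
            m.convex = true;

            // Skip objects without a renderer rather than passing null to DestroyImmediate
            if( go.TryGetComponent( out MeshRenderer meshRenderer ) )
            {
                Object.DestroyImmediate( meshRenderer );
            }
        }
    }
}
#endif
```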

This works. You don’t have to attach this MonoBehaviour to anything; the script existing is enough to cause the menu to appear.

Applying it is a little fiddly: you need to select the parent object of the prefab, right click, select its children, unselect the parent, and then click the menu item. It does not affect the undo history—I’m not sure how to add a whole set of operations as one ‘undo’ item, and I don’t want to flood the undo history with some 1400 actions.

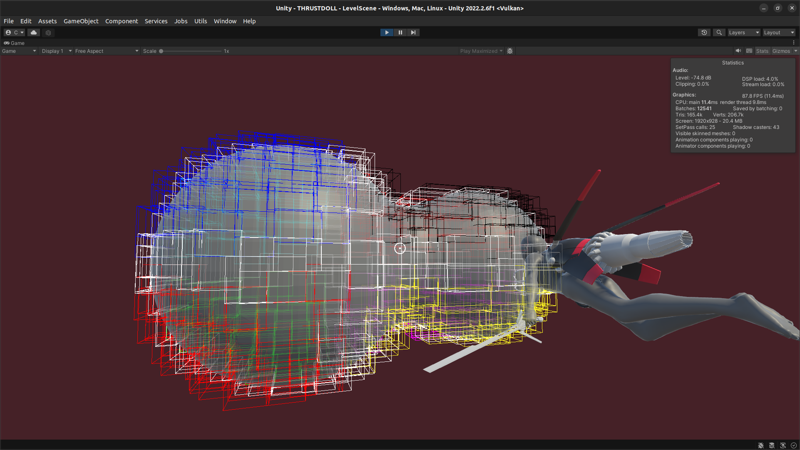

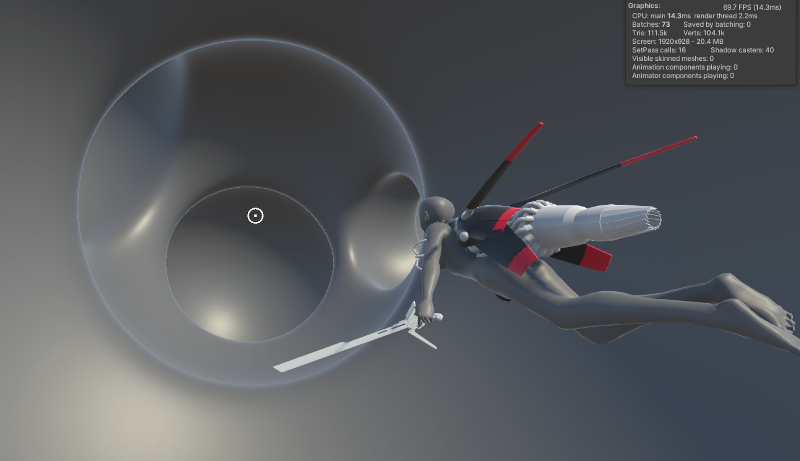

The result of generating some 700 colliders using this method.

I wondered whether baking all 700 colliders would be a problem, but it actually doesn’t cause any noticeable delay. I did get a couple of errors thrown about a null object, but only two errors for 700 colliders so I’m not quite sure what’s causing that. We’ll find out if it’s a real problem in a minute.

Now we need to be able to select all of these new colliders with an EntityQuery in order to create a collision layer. A simple tag component will do the trick, which can be added to all the GameObjects in the same way. With this done, we can build the collision layer. There’s no need to build it on every frame, since all the colliders are totally static.

Allergic reaction

That thing I said “we’ll find out if it’s a real problem in a minute”? Yeah, it was a real problem. Turns out I’ve found another bug in the baking system. To make a long story short, a loop index wasn’t being incremented in the ConvexColliderSmartBlobberSystem. The result was that all the meshes to process into colliders were getting written to the first index of an array.

But don’t worry, I’m totally capable of making my own mistakes!

That solved the baking issue, but getting collisions with convex meshes turned out to have its own puzzles. Testing using a cube with a convex mesh collider produced the expected broadphase AABB collisions, but then the narrow-phase test using DistanceBetween failed.

I switched over to testing for collisions using ColliderCast instead, and did manage to collide with the cube. But when I introduced the ‘peanut’, I started getting spurious collisions on every frame. I carried out a manual binary search, deleting half the colliders at a time until I could zero in on the problem one.

This was not helped by Unity repeatedly, unpredictably crashing when I started and stopped the player. Every time Unity crashes I have to wait for the Burst compiler to recompile all the scripts, which takes a couple of minutes. Not sure why it can’t cache this. Moreover, any changes I made to the scene are undone if I didn’t save. So I need to be constantly saving before any run of the player. I filed a bug report but I doubt it will go anywhere.

…my next project, I am absolutely switching to Bevy. (Then I can complain about Bevy!)

Anyway, eventually I narrowed down one of the problem colliders to Collider.48. Naughty Collider.48! But it wasn’t actually Collider.48’s fault—rather, I was entering my collider cast wrong.

Here’s the type signature of the collider cast:

```csharp
public static bool ColliderCast(in Collider colliderToCast,
                                in RigidTransform castStart,
                                float3 castEnd,
                                in CollisionLayer layer,
                                out ColliderCastResult result,
                                out LayerBodyInfo layerBodyInfo)
```

For some reason, I interpreted castEnd, despite obviously being called “cast end”, as instead being something like “cast vector”, relative to the current position. I guess I imagined I might at some point put the player velocity in there? Well, I passed `(0, 0, 0)` to it, which meant I was testing for collisions anywhere along the line between the current position and the world origin. Haha whoops. I’m very glad I didn’t end up trying to report that to Dreaming as a bug, but still, egg on my face.

If I simply want to test for collisions at the current location, then instead of zero I need to pass that same location as both the start and the end.

Now, you might also be wondering what a RigidTransform is. I know I did, and searched through the framework to no avail. The answer is actually… it’s part of the Unity.Mathematics library. Once I found that, writing the cast was pretty straightforward, it’s like this:

```csharp
using Latios.Psyshock;
using Unity.Entities;
using Unity.Mathematics;
using Unity.Transforms;

[WithAll(typeof(Character))]
partial struct PlayerColliderCastJob : IJobEntity
{
    public CollisionLayer TargetLayer;

    void Execute
        ( in Collider collider
        , in Rotation rotation
        , in Translation translation
        )
    {
        RigidTransform transform =
            new RigidTransform
                ( rotation.Value
                , translation.Value
                );

        // castEnd is an absolute position: passing the current position makes this a zero-length cast
        if
            ( Physics
                .ColliderCast
                    ( collider
                    , transform
                    , translation.Value
                    , TargetLayer
                    , out ColliderCastResult result
                    , out LayerBodyInfo layerBodyInfo
                    )
            )
        {
            UnityEngine.Debug.Log("Player hit a wall");
        }
    }
}
```

Except… that doesn’t work. The job runs, but no collisions are detected. I can only assume that a collider cast for a non-moving collider, where the start and end points are the same, doesn’t actually count? Let’s try adding an epsilon to it and see if that changes anything… it does not.

What does work is subtracting the velocity from the start position, so that the cast covers the volume swept out between one frame and the next.
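To make the corrected inputs concrete, here is a small sketch of how the start and end of that sweep can be derived. It is plain C# with System.Numerics standing in for Unity.Mathematics, so it runs outside Unity. It assumes, as the parameter name says, that castEnd is an absolute world-space position, and that velocity is in units per second; `SweepForFrame` is a hypothetical helper, not part of Latios.

```csharp
using System;
using System.Numerics;

// Hypothetical helper: start and end positions for a collider cast covering the volume
// swept between last frame and this frame. Both are absolute positions; castEnd is
// NOT an offset relative to castStart.
static (Vector3 castStart, Vector3 castEnd) SweepForFrame(Vector3 currentPosition, Vector3 velocity, float deltaTime)
{
    Vector3 previousPosition = currentPosition - velocity * deltaTime;
    return (previousPosition, currentPosition);
}

var (castStart, castEnd) = SweepForFrame(new Vector3(10f, 0f, 0f), new Vector3(5f, 0f, 0f), 0.25f);
// The cast sweeps back along the path the doll just travelled
Console.WriteLine($"{castStart} -> {castEnd}");
```

In the job above, castStart would go into the RigidTransform and castEnd into the float3 argument. One consequence of this design: with zero velocity the two points coincide, which is exactly the zero-length cast that found nothing, so a stationary doll would still need a separate distance query.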

I think I understand collider casts better now. Essentially it raycasts from each point on the collider’s starting position to its ending position. It doesn’t test for collisions at the start and end point (correction: it actually does, but it uses DistanceBetween, which we’ve established doesn’t work). So if you make the start and end points too close together, you won’t find any collisions.

That may be enough, especially for situations where we want to detect if you flew into a wall. We could even use it in the narrow phase of FindPairs to collide bullets with the walls.

So, thinking I’d finally found a way that would work, I eagerly put the ‘peanut’ with its 700 colliders back in the level. No spurious collisions, good… I flew the doll into the ‘peanut’ and immediately got an out-of-bounds error thrown from the deep internals of the collision detection function.

Sniffing out a bug

I gotta say, though, I feel for Dreaming. This function PointConvexDistance is doing some truly heavy-duty maths that makes all of the quaternion wrangling I was playing with last week look like nothing. And then people like me come along and poke and probe at it and come running back like “it’s not wooooorking” with some sort of weird edge case from a tiny oversight.

Let’s see if I can be a bit more helpful than that!

So, let’s take a look at PointDistance.Convex.cs. This tests whether a point is within a threshold distance of a convex collider. Here are the relevant lines:

```csharp
int bestPlaneIndex = 0;
float3 scaledPoint = point * invScale;
for (int i = 0; i < blob.facePlaneX.Length; i++)
{
    float dot = scaledPoint.x * blob.facePlaneX[i] + scaledPoint.y * blob.facePlaneY[i] + scaledPoint.z * blob.facePlaneZ[i] + blob.facePlaneDist[i];
    if (dot > maxSignedDistance)
    {
        maxSignedDistance = dot;
        bestPlaneIndex = i;
    }
}
var localNormal = new float3(blob.facePlaneX[bestPlaneIndex], blob.facePlaneY[bestPlaneIndex], blob.facePlaneZ[bestPlaneIndex]);
```

The problem seems to come from the blob.facePlaneX (Y, Z) arrays being empty. If the array is empty, then the for loop gets skipped, and bestPlaneIndex remains at 0. Then, when used to index the blob arrays, even zero is out of bounds.
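Here is a minimal sketch of the same plane search with a guard for the empty case, in plain C# so it runs outside Unity. It keeps the structure-of-arrays layout of the blob (facePlaneX/Y/Z/Dist) but assumes the point is already in the collider's unscaled local space (the invScale step is left out), and it returns a sentinel of -1 instead of defaulting to index 0. This is only an illustration of the failure mode; it is not Latios’s fix (Dreaming’s plan is to special-case these hulls).

```csharp
using System;
using System.Numerics;

// Finds the face plane with the largest signed distance to the point.
// Returns -1 when there are no planes, so the caller can't index an empty array.
static int BestPlaneIndex(float[] planeX, float[] planeY, float[] planeZ, float[] planeDist,
                          Vector3 point, out float maxSignedDistance)
{
    maxSignedDistance = float.MinValue;
    int bestPlaneIndex = -1; // sentinel: no plane found
    for (int i = 0; i < planeX.Length; i++)
    {
        float dot = point.X * planeX[i] + point.Y * planeY[i] + point.Z * planeZ[i] + planeDist[i];
        if (dot > maxSignedDistance)
        {
            maxSignedDistance = dot;
            bestPlaneIndex = i;
        }
    }
    return bestPlaneIndex;
}

float[] none = Array.Empty<float>();
// With zero face planes the loop never runs; the sentinel makes that visible
Console.WriteLine(BestPlaneIndex(none, none, none, none, Vector3.Zero, out _));
```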

This definitely seems like something that isn’t supposed to be possible. It suggests we’re testing against a convex collider that inexplicably has zero faces.

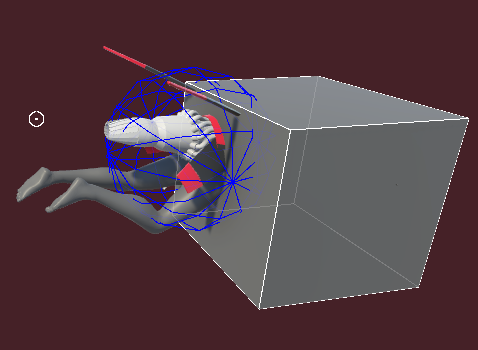

Incidentally, it is possible to visualise the collision layer using the debug functions. This results in quite a pretty breakdown of the ‘peanut’ into its AABBs…

We can see from this that all the AABBs seem to be the right size, so it seems the meshes are baking correctly, and information about them is making it into Psyshock. So what’s happening here?

We can try to diagnose this problem by inserting a couple of debug statements. For example:

```csharp
UnityEngine.Debug.LogFormat
    ( "{0}: facePlaneX.Length: {1}, Y: {2}, Z: {3}, Dist: {4}"
    , blob.meshName
    , blob.facePlaneX.Length
    , blob.facePlaneY.Length
    , blob.facePlaneZ.Length
    , blob.facePlaneDist.Length
    );
```

Such a statement can’t be bursted, but the program still runs, and outputs to the console. Sure enough, for certain colliders, the facePlaneX, Y, Z and Dist arrays are all empty, and these are the ones that throw the errors.

Let’s go back to the baker then. facePlaneX etc. are assigned starting on line 277 of ConvexColliderSmartBlobberSystem (line 278 after the modification I made to fix the previous bug). It’s possible that edgeIndicesInFacesStartsAndCounts.Length could be zero. So let’s add a debug statement just above there to check.

```csharp
if(edgeIndicesInFacesStartsAndCounts.Length == 0)
{
    UnityEngine.Debug.LogFormat("eIIFSAC was zero in mesh: {0}", meshName);
}
```

Sure enough, this reveals that for certain meshes, eIIFSAC is zero length. OK, let’s trace the story backwards.

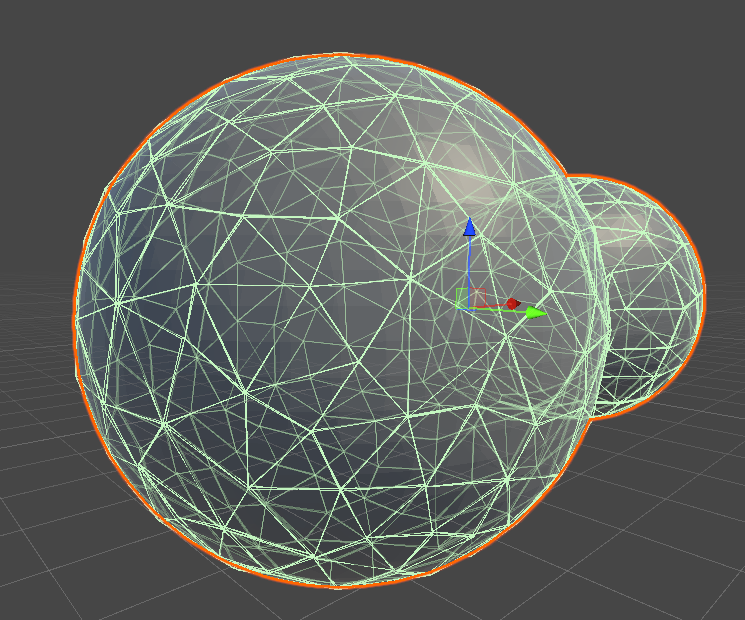

I had a look at the suspect meshes to see if anything looked off about them. The convex collider does seem to be merging certain vertices at the corners, turning a triangular prism in the render mesh into a tetrahedron in the collider. However, it’s also doing this on colliders that don’t have an obvious problem.

Then again, this is also how the old Unity Engine collider algorithm builds a collider. Perhaps Latios is diverging in how it applies the algorithm?

The eIIFSAC array is assigned based on the numFaces variable of the ConvexHullBuilder. The ConvexHullBuilder is built on line 192. Its definition is derived from Unity.Physics, modified slightly for compatibility with Latios.

So let’s log whether that’s zero as well. No zeroes come up! In that case, let’s log numFaces for all the cases where edgeIndicesInFacesStartsAndCounts is empty. The result is that all of the ‘problem’ colliders have exactly 2 faces! Ahaha, that seems sus. I think we’re getting close.

I did some further probing. When the vertices are deduplicated, there are 6. The number of vertices reported by the ConvexHullBuilder varies between 4, 5 and 6 on all the problem examples. The only thing that seems off is the faces being 2.

I spoke with Dreaming at this point. The culprit seems to be the ConvexHullBuilder. In the case where the mesh gets simplified down to two faces, the iterator over faces, GetFirstFace(0), returns invalid. This breaks the assumptions of Latios, which expects to always get a valid iterator.

I couldn’t claim to be able to parse the ConvexHullBuilder yet, but at least this points towards a workaround: we need to stop the vertices getting merged when the convex hull is built. In Blender, I can increase the thickness in the ‘solidify’ modifier when I generate the colliders. This results in no broken convex hulls. (Dreaming’s plan is to special-case these convex hulls.)

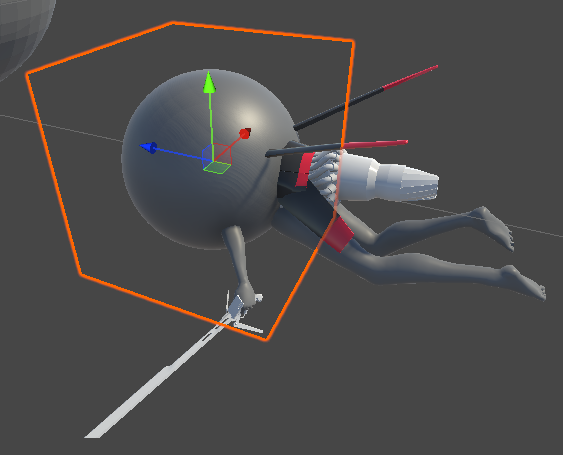

Sniffing out another bug

Having now got functional colliders, we need to solve another problem: there is a bug in the DistanceBetween function for convex colliders that leads it to return an incorrectly large distance. E.g. I’d have the two colliders intersect, or even completely overlap, and end up with a distance between 1 and 2. This happens when colliding with capsules, cubes, spheres… so it seems to be a fairly general problem!

This situation reports a distance of 1.68.

Let’s see if we can scrutinise the code for detecting collisions. Most of the DistanceBetween functions for convex colliders wrap a function called DistanceBetweenGjk. Here’s its code:

```csharp
private static ColliderDistanceResult DistanceBetweenGjk(in Collider colliderA, in RigidTransform aTransform, in Collider colliderB, in RigidTransform bTransform)
{
    var bInATransform = math.mul(math.inverse(aTransform), bTransform);
    var gjkResult     = SpatialInternal.DoGjkEpa(colliderA, colliderB, bInATransform);
    var epsilon       = gjkResult.normalizedOriginToClosestCsoPoint * math.select(1e-4f, -1e-4f, gjkResult.distance < 0f);
    DistanceBetween(gjkResult.hitpointOnAInASpace + epsilon, in colliderA, RigidTransform.identity, float.MaxValue, out var closestOnA);
    DistanceBetween(gjkResult.hitpointOnBInASpace - epsilon, in colliderB, in bInATransform, float.MaxValue, out var closestOnB);
    return BinAResultToWorld(new SpatialInternal.ColliderDistanceResultInternal
    {
        distance  = gjkResult.distance,
        hitpointA = gjkResult.hitpointOnAInASpace,
        hitpointB = gjkResult.hitpointOnBInASpace,
        normalA   = closestOnA.normal,
        normalB   = closestOnB.normal
    }, aTransform);
}
```

Given two colliders, this transforms the second collider into the local space of the first collider, then passes it to the GJK/EPA algorithm.

Here, GJK refers to the Gilbert-Johnson-Keerthi distance algorithm, tutorialised here. EPA refers to the Expanding Polytope Algorithm, which extends the GJK algorithm to find the penetration depth and normal when the shapes overlap.

I can’t possibly explain GJK better than the video accompanying the tutorial linked above.

Right now, I don’t need to worry about EPA, since I’m concerned primarily with distances. The value distance emerges from SpatialInternal.DoGjkEpa. The rest of the lines in ColliderDistanceResult are to do with locating hit points and normals, which would be used to process a collision.
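To get a feel for the core idea, here is a minimal 2D, boolean-only GJK sketch in plain C#. It only answers “do these two convex polygons overlap?” by checking whether the Minkowski difference A − B contains the origin; there is no distance output and no EPA, so it is a simplification of what Latios does in 3D, not a copy of DoGjkEpa. All names here are my own, not Psyshock’s.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Support function: the vertex of a convex polygon furthest along direction d.
static Vector2 Support(Vector2[] shape, Vector2 d)
{
    Vector2 best = shape[0];
    float bestDot = Vector2.Dot(best, d);
    for (int i = 1; i < shape.Length; i++)
    {
        float dot = Vector2.Dot(shape[i], d);
        if (dot > bestDot) { bestDot = dot; best = shape[i]; }
    }
    return best;
}

// Support point of the Minkowski difference A - B (the configuration space obstacle).
static Vector2 SupportAMinusB(Vector2[] a, Vector2[] b, Vector2 d) => Support(a, d) - Support(b, -d);

// (x cross y) cross z, expanded with the vector triple product identity.
static Vector2 TripleProduct(Vector2 x, Vector2 y, Vector2 z) => y * Vector2.Dot(x, z) - x * Vector2.Dot(y, z);

// Boolean GJK: true if the convex polygons overlap or touch.
static bool GjkIntersects(Vector2[] a, Vector2[] b)
{
    var simplex = new List<Vector2> { SupportAMinusB(a, b, Vector2.UnitX) };
    Vector2 d = -simplex[0];
    for (int iteration = 0; iteration < 64; iteration++)
    {
        if (d.LengthSquared() < 1e-12f) return true; // origin lies on the simplex: touching
        Vector2 p = SupportAMinusB(a, b, d);
        if (Vector2.Dot(p, d) < 0f) return false;     // can't reach past the origin: separated
        simplex.Add(p);

        Vector2 newest = simplex[simplex.Count - 1];
        Vector2 ao = -newest;
        if (simplex.Count == 2)
        {
            // Line case: search perpendicular to the segment, towards the origin.
            Vector2 ab = simplex[0] - newest;
            d = TripleProduct(ab, ao, ab);
        }
        else
        {
            // Triangle case: keep the edge whose outward side faces the origin.
            Vector2 ab = simplex[1] - newest;
            Vector2 ac = simplex[0] - newest;
            Vector2 abPerp = TripleProduct(ac, ab, ab); // perpendicular to ab, away from c
            Vector2 acPerp = TripleProduct(ab, ac, ac); // perpendicular to ac, away from b
            if (Vector2.Dot(abPerp, ao) > 0f) { simplex.RemoveAt(0); d = abPerp; }
            else if (Vector2.Dot(acPerp, ao) > 0f) { simplex.RemoveAt(1); d = acPerp; }
            else return true; // origin is inside the triangle: overlap
        }
    }
    return false; // did not converge; this sketch treats that as no hit
}

var unitSquare = new[] { new Vector2(0f, 0f), new Vector2(1f, 0f), new Vector2(1f, 1f), new Vector2(0f, 1f) };
Console.WriteLine(GjkIntersects(unitSquare, unitSquare)); // a shape always overlaps itself
```

The distance version keeps the same loop but tracks the point of the simplex closest to the origin; EPA only takes over once the origin is found to be inside.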

I would really like to understand how the GJK algorithm works well enough to track down the source of the error here, but with just over a week left to create some sort of prototype for my game that I can show to people, I don’t think I can afford the time right now. I am working with Dreaming to solve it—he’s actually gone to the effort of cooking up a query serializer. Instead, I’m going to have to make a decision.

The verdict

This method is viable. Thanks to the AABB broadphase structure provided by Latios, and since the collision layer is static, it’s not even noticeably slow.

The behaviour I’m planning to build is that the player should either attach to, or bounce off, walls. Both of these behaviours depend on the collision normal. One wrinkle I didn’t consider with this approach is that when the player hits a wall, it will often register collisions with several colliders at once. In that case, I would have multiple normals to worry about, and would have to select one by some means. Not an unsolvable problem.
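Two simple ways to pick that one normal are sketched below in plain C#: trust the deepest contact, or blend all the normals weighted by penetration depth. The contact representation (a signed distance, negative meaning penetration, plus a normal) is an assumption for illustration, not Psyshock’s result type.

```csharp
using System;
using System.Numerics;

// Option 1: trust the single deepest contact (most negative signed distance).
static Vector3 DeepestNormal((float distance, Vector3 normal)[] contacts)
{
    var deepest = contacts[0];
    foreach (var contact in contacts)
        if (contact.distance < deepest.distance) deepest = contact;
    return deepest.normal;
}

// Option 2: blend every normal, weighting deeper contacts more, then renormalise.
static Vector3 BlendedNormal((float distance, Vector3 normal)[] contacts)
{
    Vector3 sum = Vector3.Zero;
    foreach (var contact in contacts)
        sum += contact.normal * MathF.Max(-contact.distance, 1e-4f); // shallow contacts still count a little
    // If the normals cancel out (e.g. wedged between opposite walls), fall back to the deepest one.
    return sum.LengthSquared() > 1e-12f ? Vector3.Normalize(sum) : DeepestNormal(contacts);
}

var corner = new[] { (-0.2f, Vector3.UnitX), (-0.1f, Vector3.UnitY) };
Console.WriteLine($"deepest: {DeepestNormal(corner)}, blended: {BlendedNormal(corner)}");
```

Blending gives smoother results where the decimated faces meet at shallow angles, which is where neighbouring colliders are most likely to both register a hit.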

Still, I think it’s time to consider the alternative approach and see what advantages that brings.

Approach 2: Signed Distance Fields

I created the ‘peanut’ shape using metaballs. Essentially this has each ‘ball’ as a source of an inverse-square scalar field, which is summed, and then compared to a threshold value and converted to a triangle mesh with Marching Cubes. This is one type of implicit surface.
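The metaball field described above fits in a few lines. This sketch follows that description literally (strength / distance², summed, compared with a threshold); Blender’s exact falloff function may differ, and the names are illustrative.

```csharp
using System;
using System.Numerics;

// Each ball contributes strength / distance^2; the surface is where the sum crosses the threshold.
static float MetaballField(Vector3 p, (Vector3 center, float strength)[] balls)
{
    float sum = 0f;
    foreach (var (center, strength) in balls)
        sum += strength / MathF.Max(Vector3.DistanceSquared(p, center), 1e-6f); // clamp avoids dividing by zero at a centre
    return sum;
}

static bool IsInside(Vector3 p, (Vector3 center, float strength)[] balls, float threshold) =>
    MetaballField(p, balls) >= threshold;

var twoBalls = new[] { (Vector3.Zero, 1f), (new Vector3(3f, 0f, 0f), 1f) };
Console.WriteLine(MetaballField(new Vector3(1.5f, 0f, 0f), twoBalls)); // field at the midpoint between the balls
```

Lowering the threshold is what lets two nearby balls merge into a single peanut: at the midpoint above, the summed field is below 1 but above 0.8.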

There is another way to render implicit surfaces which skips the ‘convert to mesh’ step entirely. A Distance Field is a function which tells you the distance to the nearest surface. A Signed Distance Field (SDF) is positive when you’re outside the closed surface, and negative when you’re inside. The expert in all matters relating to SDFs is Inigo Quilez, co-founder of ShaderToy. On his site is a lot of useful information regarding raymarching and SDFs, notably the SDFs for a large number of geometric primitives, along with visual demonstrations of what’s possible.

So. SDFs can be rendered using a technique called raymarching. This is a form of raytracing, commonly used in rendering things like clouds in modern games, but it can also be used for rendering hard surfaces. Compared to ‘standard’ raytracing, which compares a ray against all the triangles in a scene (with some acceleration structure to speed it up), raymarching ‘walks’ the ray step by step, using the signed distance field. The idea is that, with each step, the SDF tells you how far you can advance the ray and remain guaranteed not to hit the surface. You walk until you either get ‘close enough’ to the surface or reach a predefined max distance/number of steps.
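The stepping loop described above (usually called sphere tracing) can be sketched on the CPU in plain C#. The step limit, maximum distance and hit epsilon are illustrative defaults, not values taken from uRaymarching.

```csharp
using System;
using System.Numerics;

// Signed distance to a sphere: positive outside, negative inside.
static float SphereSdf(Vector3 p, Vector3 center, float radius) => Vector3.Distance(p, center) - radius;

// Step along the ray by the SDF value, the largest step guaranteed not to cross the surface.
// Returns the hit distance t, or null on a miss. Also returns 0 if the ray starts inside.
static float? RayMarch(Vector3 origin, Vector3 direction, Func<Vector3, float> sdf,
                       int maxSteps = 128, float maxDistance = 100f, float hitEpsilon = 1e-3f)
{
    direction = Vector3.Normalize(direction);
    float t = 0f;
    for (int step = 0; step < maxSteps && t < maxDistance; step++)
    {
        float d = sdf(origin + direction * t);
        if (d < hitEpsilon) return t; // close enough to the surface
        t += d;
    }
    return null; // ran out of steps or distance
}

Console.WriteLine(RayMarch(new Vector3(0f, 0f, -5f), Vector3.UnitZ, q => SphereSdf(q, Vector3.Zero, 1f)));
```

A ray heading straight at the sphere converges in a couple of steps; rays that graze the surface take many small steps, which is why the step limit matters for performance.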

To use raymarching for rendering, I’d essentially have to add a whole extra render pipeline alongside the standard rendering. We’d render the scene to a buffer with depth, render the raymarched stuff, and then combine them using depth comparison.
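The depth comparison step can be sketched per pixel in plain C#. One subtlety: a raymarcher reports distance t along the normalised view ray, while a linearised depth buffer stores distance along the camera’s forward axis, so the two need converting before comparing. The sketch assumes the raster depth has already been linearised to eye-space units; the function names are hypothetical.

```csharp
using System;
using System.Numerics;

// Convert a distance along the view ray into eye-space depth (distance along camera forward).
static float RayDistanceToEyeDepth(float t, Vector3 rayDirection, Vector3 cameraForward) =>
    t * Vector3.Dot(Vector3.Normalize(rayDirection), Vector3.Normalize(cameraForward));

// Keep whichever surface is closer to the camera; a null marched depth means the ray missed.
static Vector3 Composite(Vector3 rasterColor, float rasterEyeDepth, Vector3 marchedColor, float? marchedEyeDepth) =>
    marchedEyeDepth is float d && d < rasterEyeDepth ? marchedColor : rasterColor;

Console.WriteLine(Composite(Vector3.UnitX, 5f, Vector3.UnitY, 3f)); // raymarched surface is nearer
```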

A quick search finds a few implementations of raymarching in Unity. Many are experimental. The most promising I’ve found is uRaymarching by hecomi, which, given a signed distance field, generates a shader to match. So let’s see about installing it.

It’s pretty easy to install and generate a test shader for a cube, which does indeed display a sphere inside it using raymarching!

It even gets accurate intersections with triangle meshes and receives shadows!

Unfortunately, when I hit play and try to see how well this works in-game, it doesn’t work. The error has to do with the SRP batcher, which this raymarching shader is not yet compatible with. In Entities Graphics, all rendering is done through the SRP batcher.

I can solve this problem by removing this object from the ECS. Once I do this, everything renders correctly. The performance even seems fine, although the amount of the screen covered by the raymarching shader is pretty small right now.

Generating a nice SDF

Ideally, I’d be able to place objects in the scene, and then read their properties to generate the SDF in a similar way to building a level with metaballs. This will make authoring much easier. And this is actually possible; there is an ‘interactive metaballs’ scene among uRaymarching’s examples.

The SDF used is this:

```hlsl
// These inverse transform matrices are provided
// from TransformProvider script
float4x4 _Cube;
float4x4 _Sphere;
float4x4 _Torus;
float4x4 _Plane;
float _Smooth;

inline float DistanceFunction(float3 wpos)
{
    float4 cPos = mul(_Cube, float4(wpos, 1.0));
    float4 sPos = mul(_Sphere, float4(wpos, 1.0));
    float4 tPos = mul(_Torus, float4(wpos, 1.0));
    float4 pPos = mul(_Plane, float4(wpos, 1.0));
    float s = Sphere(sPos, 0.5);
    float c = Box(cPos, 0.5);
    float t = Torus(tPos, float2(0.5, 0.2));
    float p = Plane(pPos, float3(0, 1, 0));
    float sc = SmoothMin(s, c, _Smooth);
    float tp = SmoothMin(t, p, _Smooth);
    return SmoothMin(sc, tp, _Smooth);
}
```

The TransformProvider script reads matrices from gameobjects and copies them onto the material properties.

```csharp
using UnityEngine;

[ExecuteInEditMode]
public class TransformProvider : MonoBehaviour
{
    [System.Serializable]
    public class NameTransformPair
    {
        public string name;
        public Transform transform;
    }

    [SerializeField]
    NameTransformPair[] pairs;

    void Update()
    {
        var renderer = GetComponent<Renderer>();
        if (!renderer) return;

        var material = renderer.sharedMaterial;
        if (!material) return;

        foreach (var pair in pairs)
        {
            var pos = pair.transform.position;
            var rot = pair.transform.rotation;
            var mat = Matrix4x4.TRS(pos, rot, Vector3.one);
            var invMat = Matrix4x4.Inverse(mat);
            material.SetMatrix(pair.name, invMat);
        }
    }
}
```

We can, I think, ECS-ify this, although we’ll have to mess with managed components, since the object with the raymarching material has to sit outside the ECS. (In the future after this period of crunch—bc that’s what this is, sigh—I can look into writing my own raymarching shader that’s compatible with the SRP batcher.)

The other question is the raymarching volume. It seems this shader is designed to go on a shape like a cuboid, but ideally we could just raymarch starting from the whole screen. The easiest way to accomplish this is to bolt a quad to the camera and use this with shape=NONE set on the raymarching shader.

There is a convenient ‘raymarching renderer’ component available from uRaymarching, but unfortunately, this does not work with the Universal Render Pipeline. Better would be to do a full screen blit. I tried copying that approach, but unsurprisingly, the shader is not compatible and we don’t see anything.

Now, the quad doesn’t actually have to be closer than the rest of the scene to get decent depth intersections with raymarching. It just needs to fill the whole screen. I think the solution to this may be something like the billboard shader used previously in this project. Except… we can’t use shadergraph this time. So I’d have to edit the vertex shaders used in the raymarching shader. And… they look kind of complex. It would take some work to figure out which bits are essential when all I want to do is get a quad facing the camera.

Thus, for a simple hacky way to do it, I’m just going to use the function that updates the camera position to also move the quad around. The quad functions as kind of a ‘window into the raymarching dimension’. Here’s the updated function:

```csharp
using Unity.Entities;
using Unity.Transforms;
using Latios;

[UpdateInGroup(typeof(LateSimulationSystemGroup))]
partial class CameraUpdateSystem : SubSystem
{
    private UnityEngine.Transform _camera;
    private UnityEngine.Transform _raymarchingQuad;

    protected override void OnCreate() {
        _camera = UnityEngine.Camera.main.transform;
        _raymarchingQuad = UnityEngine.GameObject.Find("RaymarchingQuad").transform;
        RequireForUpdate<CameraTransform>();
    }

    protected override void OnUpdate() {
        Entity cameraTransform =
            SystemAPI
                .GetSingletonEntity<CameraTransform>();
        LocalToWorld transform =
            SystemAPI
                .GetComponent<LocalToWorld>(cameraTransform);

        _camera.position = transform.Position;
        _camera.rotation = transform.Rotation;

        _raymarchingQuad.position = transform.Position + transform.Forward;
        _raymarchingQuad.rotation = transform.Rotation;
    }
}
```

Sure enough, with this function, the quad acts as a kind of ‘raymarching window’ positioned a metre away from the camera. We can scale it up a bit to fully cover the viewport and get raymarching on every pixel.

It feels hacky but whatever.
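Rather than eyeballing the scale, the quad size that exactly fills the view follows from the field of view: height = 2 · d · tan(fov / 2) and width = height · aspect. The sketch below assumes Unity’s convention that Camera.fieldOfView is the vertical field of view in degrees.

```csharp
using System;

// Width and height of a camera-facing quad at `distance` that exactly fills a perspective view.
// verticalFovDegrees follows Unity's Camera.fieldOfView convention; aspect is width / height.
static (float width, float height) QuadSizeToFillView(float distance, float verticalFovDegrees, float aspect)
{
    float halfFovRadians = verticalFovDegrees * MathF.PI / 180f / 2f;
    float height = 2f * distance * MathF.Tan(halfFovRadians);
    return (height * aspect, height);
}

var size = QuadSizeToFillView(1f, 60f, 16f / 9f);
Console.WriteLine($"scale the 1x1 quad to {size.width} x {size.height}");
```

Unity’s built-in Quad primitive is 1 × 1 unit, so these values can go straight into its localScale. The quad also has to sit beyond the camera’s near clip plane, or it gets clipped away.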

Peanut: SDF edition

Now we have an SDF rendering with raymarching, I can get on with editing. This is where I hit another bizarre problem: the field for editing code in this shader gen plugin… doesn’t fucking work. As in, I can move a cursor, select text, etc. but when I try to type, the cursor moves but the text doesn’t change.

Fine! Fine! Whatever. Unity changed something and broke this plugin, I guess. I’ll have to directly edit the shader file now that it’s generated. At least this is pretty easy: there’s an obvious marked place where the SDF gets pasted in, and another for post-processing effects. …and when I do that, the version in the Unity editor updates to match. Bizarre. But that’s fine, I can work with this.

Various primitives are provided by uRaymarching, so let’s take a look at that first. The primitives provided are:

- Sphere

- RoundBox

- Box

- Torus

- Plane

- Cylinder

- HexagonalPrism

The useful mathematical functions let you repeat a primitive infinitely (one of the clever tricks that raymarching offers), rotate a primitive, twist it, or blend two primitives exponentially, which is the SmoothMin function.
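The exponential smooth minimum is −log(e^(−k·a) + e^(−k·b)) / k. I’m assuming uRaymarching’s SmoothMin has this form, since the description above says it blends exponentially; the sketch below rewrites it relative to min(a, b), which is mathematically identical but can’t overflow. The textbook form overflows float once k·a or k·b drops below roughly −88.

```csharp
using System;

// Exponential smooth minimum, written relative to m = min(a, b) so no exponent is positive.
// Larger k gives a sharper blend (approaching min); smaller k gives a rounder, wider blend.
static float SmoothMinExp(float a, float b, float k)
{
    float m = MathF.Min(a, b);
    return m - MathF.Log(MathF.Exp(-k * (a - m)) + MathF.Exp(-k * (b - m))) / k;
}

Console.WriteLine(SmoothMinExp(2f, 2f, 1f)); // equal inputs are pulled down by ln(2) / k
```

Note that the result is always at or below the plain min, so the blended surface bulges outward where two shapes meet.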

We want to fly around inside the shapes. Creating SDFs for insides rather than outsides presents some complications, as this article by Inigo Quilez discusses. But with spheres we might just be OK…?

Here’s how I think we’d be able to recreate the ‘peanut’, from the inside:

- generate the sdfs of two spheres, make them negative

- smoothmin the result

The code for this idea (inside a much larger shader) looks like so:

```hlsl
// @block Properties
_Smooth("Smooth", Range(0.5,2)) = 1.2
// @endblock

// ...

// @block DistanceFunction
float _Smooth;

inline float DistanceFunction(float3 pos)
{
    float3 s1pos = float3(35, 0, 0);
    float3 s2pos = float3(0, 0, 0);
    float s1 = -Sphere(pos - s1pos, 30);
    float s2 = -Sphere(pos - s2pos, 20);
    return SmoothMin(s1, s2, _Smooth);
}
// @endblock
```

(n.b. I could also pass the sphere locations and radii as material properties rather than hardcoding them the same way I have the smoothness, but let’s not worry about that for now.)

Now. From outside, if I don’t make the sphere SDFs negative, we can get a reasonable ‘peanut’ shape:

However, rendering from inside presents a problem. If I compute the SDF for just one negative sphere, it does indeed look like we are inside a sphere. But if I try the minimum of two negative spheres, viewed from inside… well, it seems we get the Boolean intersection rather than the Boolean union. A little thought confirms that that is indeed the case: min tells us the distance to the nearest wall. If you’re sitting in the intersection of two spheres, that’s a lens.

Hmm, what about the max? Given the SDFs of two shapes, the max tells you the distance to the nearest point on the further shape. That sounds funky and unhelpful.

It seems that proceeding in terms of primitives and simple operations may be the wrong way to think about this problem. Let’s think it through logically.

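Suppose we have an SDF built from a set of spheres. Without any smoothing, the distance to the union of the spheres is the minimum of the individual sphere distances, and the inside of the union is exactly where that minimum is negative. To turn that inside into open space, we just flip the sign of the result.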

…which is to say we do the minus sign after the min. It’s that simple. Argh.

Inigo’s article explains why this won’t work with general SDFs, but at least with spheres, I believe it will get the correct result. And it works decently well with the smoothmin as well.
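The difference between the two orderings is easy to check numerically. This sketch uses the peanut’s two spheres from the shader above (centre (35, 0, 0) with radius 30, and the origin with radius 20) and a test point inside the big sphere only. With smoothing, the same fix is to take the SmoothMin of the plain sphere SDFs and then negate.

```csharp
using System;
using System.Numerics;

static float SphereSdf(Vector3 p, Vector3 center, float radius) => Vector3.Distance(p, center) - radius;

// First attempt: negate each sphere, then take the min. Only the intersection (the lens) is open.
static float NegateThenMin(Vector3 p) =>
    MathF.Min(-SphereSdf(p, new Vector3(35f, 0f, 0f), 30f), -SphereSdf(p, Vector3.Zero, 20f));

// Fix: take the min (the union) first, then negate, so the whole peanut interior is open space.
static float MinThenNegate(Vector3 p) =>
    -MathF.Min(SphereSdf(p, new Vector3(35f, 0f, 0f), 30f), SphereSdf(p, Vector3.Zero, 20f));

Vector3 insideBigSphereOnly = new Vector3(50f, 0f, 0f);
Console.WriteLine($"{NegateThenMin(insideBigSphereOnly)} vs {MinThenNegate(insideBigSphereOnly)}");
```

At that point the first version reports a negative value, i.e. solid wall, even though the point is inside the peanut; the second reports 15, the distance to the nearest wall.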

Getting data onto the graphics card

Now, we want the ECS to know about the SDF, and be able to compute it internally, so it can be used to test collisions.

(Why do I sometimes write ‘we’ and sometimes write ‘I’? I guess I imagine a reader following alongside me in the quest to make this game, but. I don’t know. Just feels right.)

Ideally, I’d like the shader to be flexible enough to draw an arbitrary set of spheres, each reading their transforms from GameObjects I can organise in the scene to my liking. If I could pass an array of transform matrices and radii to the shader, the function could iterate over these to find the nearest sphere to a given point, and the second-nearest, and then smoothmin those two distances.
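Here is a CPU-side sketch of that nearest/second-nearest idea, which is also roughly what the ECS would need for collision tests. It assumes spheres are given as centre plus radius in the first `count` slots of a fixed-size array (mirroring a shader array with a separate length), reuses the overflow-safe exponential smooth min, and negates at the end so the interior is open space. `LevelSdf` is a hypothetical name.

```csharp
using System;
using System.Numerics;

static float SmoothMinExp(float a, float b, float k)
{
    float m = MathF.Min(a, b);
    return m - MathF.Log(MathF.Exp(-k * (a - m)) + MathF.Exp(-k * (b - m))) / k;
}

// Track the two smallest sphere distances, smooth-min only those, then negate.
// Assumes count >= 1; slots at or beyond count are ignored.
static float LevelSdf(Vector3 p, (Vector3 center, float radius)[] spheres, int count, float k)
{
    float nearest = float.MaxValue, secondNearest = float.MaxValue;
    for (int i = 0; i < count; i++)
    {
        float d = Vector3.Distance(p, spheres[i].center) - spheres[i].radius;
        if (d < nearest) { secondNearest = nearest; nearest = d; }
        else if (d < secondNearest) { secondNearest = d; }
    }
    return count < 2 ? -nearest : -SmoothMinExp(nearest, secondNearest, k);
}

var peanut = new (Vector3 center, float radius)[16];
peanut[0] = (new Vector3(35f, 0f, 0f), 30f);
peanut[1] = (Vector3.Zero, 20f);
Console.WriteLine(LevelSdf(new Vector3(50f, 0f, 0f), peanut, 2, 1.2f));
```

Blending only the two nearest spheres ignores a third sphere where three meet. Since the exponential form extends naturally to −log(Σ e^(−k·dᵢ)) / k, summing over all spheres is an alternative at the cost of one exp per sphere.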

One way to do this might be the function Shader.SetGlobalMatrixArray. This lets us copy some data onto the graphics card which can be accessed from within shaders.

Since we want these same values available in the ECS, we’ll need to bake these GameObjects into entities. We can possibly kill two birds with one stone by writing a baker that gathers all the relevant transforms, aggregates them into an array, and writes it to the shader global. In fact we could store this same array as a blob asset instead of performing an entity query…

Hehe, using blob assets to store a very blobby level. How fitting.

That said, what if we want the spheres to move around? …a case that’s not worth worrying about at this point.

So, how do we aggregate a bunch of transforms in a baker? The answer seems to be to use a Baking System. A Baking System mostly doesn’t seem to have anything special going on except that it runs during baking and has access to the BlobAssetStore if you inherit from this class.

So if I’m understanding this right, the flow is that you use normal Bakers which run per GameObject, and then in the OnUpdate of the baking system you do something with those entities. Since I’m expecting a level to contain a few dozen spheres at most, I don’t need to worry about jobs and parallelism for this.

Here’s the baker:

```csharp
using Unity.Entities;

public class MetaballAuthoring : UnityEngine.MonoBehaviour
{
}

public class MetaballBaker : Baker<MetaballAuthoring>
{
    public override void Bake(MetaballAuthoring authoring)
    {
        AddComponent<Metaball>();
    }
}
```

Metaball is just a tag component.

OK, time to make some mistakes. Here is my first implementation of this idea.

```csharp
using Unity.Entities;
using Unity.Collections;
using Unity.Mathematics;
using Unity.Transforms;

[WorldSystemFilter(WorldSystemFilterFlags.BakingSystem)]
partial class MetaballBakingSystem : BakingSystem
{
    protected override void OnUpdate()
    {
        EntityQuery query =
            SystemAPI
                .QueryBuilder()
                .WithAll<LocalToWorld, Metaball>()
                .Build();

        NativeArray<LocalToWorld> transforms =
            query
                .ToComponentDataArray<LocalToWorld>(Allocator.Temp);

        NativeArray<float4x4> transformArrays =
            new NativeArray<float4x4>(transforms.Length, Allocator.Temp);

        int i = 0;
        foreach (LocalToWorld transform in transforms)
        {
            transformArrays[i] = math.inverse(transform.Value);
            ++i;
        }

        UnityEngine.Shader.SetGlobalMatrixArray("_MetaballTransforms", transformArrays);
    }
}
```

Now, SetGlobalMatrixArray takes a Matrix4x4[] as its argument. transformArrays is a NativeArray<float4x4>. However, there is an automatic cast defined from float4x4 to Matrix4x4. Is the same true from NativeArray? Do your worst, C# compiler. (Sadly this isn’t getting Bursted or I could say ‘do your worst, Burst’.)

…predictably, it doesn’t magically do everything I want. Calling ToArray() on transformArrays doesn’t help either: that produces a float4x4[], and C# won’t convert an array element by element. OK, so let’s do it like this:

```csharp
using Unity.Entities;
using Unity.Collections;
using Unity.Mathematics;
using Unity.Transforms;
using UnityEngine;

[WorldSystemFilter(WorldSystemFilterFlags.BakingSystem)]
partial class MetaballBakingSystem : BakingSystem
{
    protected override void OnUpdate()
    {
        EntityQuery query =
            SystemAPI
                .QueryBuilder()
                .WithAll<LocalToWorld, Metaball>()
                .Build();

        NativeArray<LocalToWorld> transforms =
            query
                .ToComponentDataArray<LocalToWorld>(Allocator.Temp);

        Matrix4x4[] transformMatrices =
            new Matrix4x4[transforms.Length];

        int i = 0;
        foreach (LocalToWorld transform in transforms)
        {
            transformMatrices[i] =
                (Matrix4x4) math.inverse(transform.Value);
            ++i;
        }

        if (transformMatrices.Length > 0)
        {
            Shader.SetGlobalMatrixArray("_MetaballTransforms", transformMatrices);
        }
    }
}
```

That’s a managed array, so it leaves garbage for the collector, but this only runs once per bake, so it’s whatever. That compiles and works. The check that it’s not empty is there because SetGlobalMatrixArray throws an error if you pass it a zero-length array.

OK, now I just need to read this within the shader itself. The only problem is… how will the shader know how long the array is at compile time? We will have to allocate a certain size of array in advance, even if some of it is empty. I’ll make the array hold 16 metaballs for now inside the shader, and pass the actual length of the array as a separate shader property.

Here I encountered an annoying issue. When you set a global shader array, Unity fixes its size the first time you assign it. You have to restart Unity in order to assign a different size of the array. The solution is to allocate the array at size 16 (or whatever’s defined in the shader) instead of transforms.Length. That lets me freely add or remove metaballs up to that hardcoded limit.
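To make the fixed-size idea concrete, here is a small CPU-side sketch in C++. It stands in for the C# and HLSL in this post and is not code from the project. kMaxMetaballs, PackedMetaballs and PackMetaballs are hypothetical names. The one real constraint it mirrors is that the uploaded array always has the length declared in the shader, while the actual count travels separately.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Must match the array size declared in the shader (e.g. _MetaballRadii[16]).
constexpr std::size_t kMaxMetaballs = 16;

struct PackedMetaballs {
    std::array<float, kMaxMetaballs> radii{};  // unused slots stay zero
    int count = 0;                             // what the shader loops over (_NumMetaballs)
};

// Copies up to kMaxMetaballs values into a fixed-length array, so the uploaded
// array never changes size. Extra metaballs are dropped instead of overflowing.
PackedMetaballs PackMetaballs(const std::vector<float>& radii) {
    PackedMetaballs packed;
    const std::size_t n = std::min(radii.size(), kMaxMetaballs);
    std::copy(radii.begin(), radii.begin() + static_cast<std::ptrdiff_t>(n), packed.radii.begin());
    packed.count = static_cast<int>(n);
    assert(static_cast<std::size_t>(packed.count) <= kMaxMetaballs);
    return packed;
}
```

Dropping extras rather than growing the array is a design choice. The baking code could just as well log a warning when a level goes over the limit.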

Getting things wrong repeatedly

Here’s the new distance function. See if you can figure out the mistake in my approach ;)

```hlsl
// @block DistanceFunction
float _Smooth;
float4x4 _MetaballTransforms[16];
int _NumMetaballs;

inline float DistanceFunction(float3 pos)
{
    float distance = 1./0.;
    for (int i = 0; i < _NumMetaballs; ++i)
    {
        float sphereDist = Sphere(mul(_MetaballTransforms[i],float4(pos,1.0)),0.5);
        distance = min(sphereDist,distance);
    }
    return distance;
}
// @endblock
```

We convert the 3D worldspace position into a 4D homogeneous coordinate in order to use a 4x4 matrix including translation, multiply it with the inverse transform, and then pass the transformed point to the sphere SDF. The loop finds the minimum of all the SDFs. The number 0.5 is the radius of Unity’s default sphere, so I can place the spheres in the Unity editor (with BakingOnlyEntityAuthoring MonoBehaviours attached) and get equivalent metaballs in the SDF.
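Here is the same idea as a C++ sketch that mirrors the shader rather than reproducing it. It does a homogeneous multiply so the translation column applies, evaluates a sphere SDF of radius 0.5, and takes a min over all spheres. Mat4, InverseTranslation and TransformPoint are hypothetical helpers. Only translations are inverted here, to keep it short.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct Vec3 { float x, y, z; };

// Row-major 4x4 matrix used with column vectors, like HLSL mul(M, v):
// the translation lives in the last column.
struct Mat4 { float m[4][4]; };

// The inverse of a translation by t is a translation by -t.
Mat4 InverseTranslation(Vec3 t) {
    Mat4 r = {{{1, 0, 0, -t.x}, {0, 1, 0, -t.y}, {0, 0, 1, -t.z}, {0, 0, 0, 1}}};
    return r;
}

// Equivalent of mul(M, float4(p, 1.0)).xyz: w = 1 makes the translation column count.
Vec3 TransformPoint(const Mat4& M, Vec3 p) {
    return {M.m[0][0] * p.x + M.m[0][1] * p.y + M.m[0][2] * p.z + M.m[0][3],
            M.m[1][0] * p.x + M.m[1][1] * p.y + M.m[1][2] * p.z + M.m[1][3],
            M.m[2][0] * p.x + M.m[2][1] * p.y + M.m[2][2] * p.z + M.m[2][3]};
}

float Sphere(Vec3 p, float radius) {
    assert(radius >= 0.0f);
    return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z) - radius;
}

// Union of spheres = minimum of their SDFs.
float DistanceFunction(Vec3 pos, const std::vector<Mat4>& inverseTransforms) {
    float distance = std::numeric_limits<float>::infinity();
    for (const Mat4& inv : inverseTransforms) {
        distance = std::min(distance, Sphere(TransformPoint(inv, pos), 0.5f));
    }
    return distance;
}
```

With spheres at x = 2 and x = -2, the origin is 1.5 units from both surfaces, and the point (2, 0, 0) sits 0.5 units inside the first one.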

But as soon as I tried this with one sphere… it wasn’t getting transformed. The sphere would always be drawn at the origin. After poking around and scratching my head for a while I realised I’d written “_Transforms” in one place and “_MetaballTransforms” in the other. Such is programming!

Now, with a slight modification, I can smooth the spheres together. Here’s one way I might do it.

```hlsl
float distance = 1./0.;
float secondDistance = 1./0.;
for (int i = 0; i < _NumMetaballs; ++i)
{
    float sphereDist = Sphere(mul(_MetaballTransforms[i],float4(pos,1.0)),0.5);
    if (sphereDist < distance)
    {
        secondDistance = distance;
        distance = sphereDist;
    } else if (sphereDist < secondDistance && sphereDist > distance) {
        secondDistance = sphereDist;
    }
}
return SmoothMin(distance,secondDistance,_Smooth);
// @endblock
```

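The problem with this method is that using if instead of min risks divergent branching. GPUs don’t predict branches the way CPUs do, but when threads running together take different paths, the hardware has to execute both. Given DistanceFunction is essentially an inner loop in the raymarching algorithm, that’s bad. But we’ll see if it makes a noticeable impact.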
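If the branches do turn out to matter, the two-smallest tracking can be written with min and max only. Here is a hedged C++ sketch; the HLSL would use the same intrinsics, and TwoSmallest, UpdateTwoSmallest and FindTwoSmallest are hypothetical names. The trick is that the new second-smallest value is the smaller of the old second-smallest and whichever of the old smallest and the new sample is larger.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>
#include <vector>

struct TwoSmallest {
    float first = std::numeric_limits<float>::infinity();
    float second = std::numeric_limits<float>::infinity();
};

// Branch-free update: no if/else, just min and max.
// The second line uses the *old* first value, so the order matters.
void UpdateTwoSmallest(TwoSmallest& t, float d) {
    t.second = std::min(t.second, std::max(t.first, d));
    t.first = std::min(t.first, d);
}

TwoSmallest FindTwoSmallest(const std::vector<float>& distances) {
    TwoSmallest t;
    for (float d : distances) UpdateTwoSmallest(t, d);
    assert(t.first <= t.second);
    return t;
}
```

This version also handles two spheres at exactly the same distance, which the sphereDist > distance check in the fragment above would skip. The shader compiler may well turn a small if/else into selects on its own, so it's worth profiling before switching.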

Initial tests with two metaballs (viewed from outside) seemed promising, although there was a noticeable draw distance limit, and a problem where rays that passed close to the surface of the metaballs would terminate early. The next step was to increase the count to seven metaballs. This… worked much less well than I hoped. Even with the raymarching loop limit cranked up to 100, the balls would pop in and out of visibility seemingly at random. And suddenly my framerate was in the mid 30s.

So much for raymarching having a negligible performance impact…

I did notice one thing while testing, which is that the framerate increased drastically when I flew near the cubes. This is because the raymarching loop could terminate much, much sooner. So maybe if I switch to negative space and sit inside the spheres, matters might improve…
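A bare-bones sphere tracer shows why proximity matters so much. This is a generic C++ sketch, not uRaymarching's loop, and SceneSdf, maxSteps, maxDistance and epsilon are assumptions. Each step advances by the SDF value, so rays far from any surface cover ground quickly. Rays that skim a surface take tiny steps and can run out of iterations before they register a hit, which looks a lot like the popping described above.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 Add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 Scale(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }

// Example scene: a single sphere of radius 1 at the origin.
float SceneSdf(Vec3 p) { return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z) - 1.0f; }

struct MarchResult { bool hit; int steps; };

// Sphere tracing: step forward by the SDF value, which is always a safe distance.
// The loop ends on a hit (distance below epsilon), when the ray passes the draw
// distance, or when it runs out of steps; the last two count as misses.
MarchResult Raymarch(Vec3 origin, Vec3 dir, int maxSteps, float maxDistance, float epsilon) {
    assert(maxSteps > 0 && epsilon > 0.0f);
    float t = 0.0f;
    for (int i = 0; i < maxSteps; ++i) {
        float d = SceneSdf(Add(origin, Scale(dir, t)));
        if (d < epsilon) return {true, i + 1};
        t += d;
        if (t > maxDistance) return {false, i + 1};
    }
    return {false, maxSteps};
}
```

Aimed straight at the sphere from (0, 0, -10), the loop hits on its second evaluation. Offset to y = 0.99 so it grazes the rim, it needs many more steps, and with the step limit set to 5 it reports a miss.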

…they did not. Almost nothing is visible. I’m confused, because this situation ought to be equivalent to the initial tests.

Maybe… scaling the position before testing it against the sphere of radius 0.5 is the problem? Thinking about it, yeah, the distances it would return would effectively be scaled down by the scale of the sphere! Of course! And it would lead to weird, incompatible results when comparing spheres with different scales. Oh, I thought I’d been so clever!

Getting there in the end

In fact, if I’d read Inigo’s site more carefully, I’d have noticed this problem mentioned on this page, under ‘Scale’.
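Inigo's fix for a uniform scale s is to evaluate the primitive at p / s and multiply the result by s. Here is a C++ sketch comparing the naive version with the corrected one; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float Length(Vec3 p) { return std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z); }

// Unity's default sphere: radius 0.5 in its own local space.
float UnitSphere(Vec3 local) { return Length(local) - 0.5f; }

// What the first shader effectively did: move into the sphere's local (scaled)
// space and stop there. The result is measured in local units, not world units.
float NaiveScaledSphere(Vec3 p, Vec3 center, float s) {
    assert(s > 0.0f);
    Vec3 local = {(p.x - center.x) / s, (p.y - center.y) / s, (p.z - center.z) / s};
    return UnitSphere(local);
}

// Uniform-scale fix: multiply the local distance back by s to get world units.
float ScaledSphere(Vec3 p, Vec3 center, float s) {
    return NaiveScaledSphere(p, center, s) * s;
}
```

For a sphere scaled by 4 (world radius 2), a point 6 units from its centre is really 4 units from the surface. The naive version reports 1, and the corrected one reports 4. For s greater than 1, the naive value underestimates the distance, which only slows the march. For s less than 1, it overestimates, so rays can step straight through a surface. For a sphere, multiplying back by s is the same as length(p - c) - 0.5 * s: a plain sphere with radius 0.5 * s.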

Rather than scaling the spheres up and down, I could give each one a radius stored on the Metaball component. This would be unfortunate, because it means the spheres in the editor won’t match the metaballs. Unless… I write a more subtle baking function.

So I need to write an array of sphere radii, and an array of transformation matrices that represent translation only (since who cares about rotation for spheres! Though this does limit me to only spheres and not general ellipsoids). Well, we don’t have to write the full matrices in that case: we can just write the translation vector. If I add other shapes later, I can come up with ways to bake them.
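Concretely, each metaball then only needs a negated translation and a radius. The arithmetic can be sketched in C++ like this, mirroring the baking code and shader that follow; the names are hypothetical, and Vec4 stands in for Unity's Vector4. The translation is stored negated so the shader can simply add it. The radius is half the average scale, because Unity's default sphere has radius 0.5.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };

struct BakedMetaball {
    Vec4 negatedTranslation;  // what goes into _MetaballTranslations[i]
    float radius;             // what goes into _MetaballRadii[i]
};

// Scale s on the default sphere gives radius 0.5 * s; non-uniform scales are
// averaged, so radius = (x + y + z) / 6.
BakedMetaball BakeMetaball(Vec3 translation, Vec3 scale) {
    BakedMetaball b;
    b.negatedTranslation = {-translation.x, -translation.y, -translation.z, 1.0f};
    b.radius = (scale.x + scale.y + scale.z) / 6.0f;
    assert(b.radius >= 0.0f);
    return b;
}

// Mirrors Sphere(pos + _MetaballTranslations[i].xyz, _MetaballRadii[i]).
float EvaluateMetaball(Vec3 pos, const BakedMetaball& b) {
    float x = pos.x + b.negatedTranslation.x;
    float y = pos.y + b.negatedTranslation.y;
    float z = pos.z + b.negatedTranslation.z;
    return std::sqrt(x * x + y * y + z * z) - b.radius;
}
```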

Here’s a new baking function.

```csharp
using Unity.Entities;
using Unity.Collections;
using Unity.Mathematics;
using Unity.Transforms;
using UnityEngine;

[WorldSystemFilter(WorldSystemFilterFlags.BakingSystem)]
partial class MetaballBakingSystem : BakingSystem
{
    // Must match the array sizes declared in the shader.
    const int MaxMetaballs = 16;

    protected override void OnUpdate()
    {
        EntityQuery query =
            SystemAPI
                .QueryBuilder()
                .WithAll<Translation, NonUniformScale, Metaball>()
                .Build();

        // Both arrays come from the same query, so index i refers to the same entity.
        NativeArray<Translation> translations =
            query.ToComponentDataArray<Translation>(Allocator.Temp);
        NativeArray<NonUniformScale> scales =
            query.ToComponentDataArray<NonUniformScale>(Allocator.Temp);

        // Always allocate the full size: Unity fixes a global array's length on first assignment.
        Vector4[] translationVectors = new Vector4[MaxMetaballs];
        float[] radii = new float[MaxMetaballs];

        // Metaballs beyond the limit are ignored instead of overrunning the arrays.
        int count = math.min(translations.Length, MaxMetaballs);

        for (int i = 0; i < count; ++i)
        {
            // Stored negated, so the shader computes pos + translation = pos - centre.
            translationVectors[i] = (Vector4) (new float4(-translations[i].Value, 1.0f));

            // Only uniform scales supported: radius is half the average of all scales
            // (Unity's default sphere has radius 0.5).
            float3 s = scales[i].Value;
            radii[i] = (s.x + s.y + s.z) / 6f;
        }

        Shader.SetGlobalVectorArray("_MetaballTranslations", translationVectors);
        Shader.SetGlobalFloatArray("_MetaballRadii", radii);
        Shader.SetGlobalInteger("_NumMetaballs", count);
    }
}
```

The SDF needs to change a bit to match this:

```hlsl
float _Smooth;
uniform float4 _MetaballTranslations[16];
uniform float _MetaballRadii[16];
uniform int _NumMetaballs;

inline float DistanceFunction(float3 pos)
{
    float distance = 1./0.;
    float secondDistance = 1./0.;
    for (int i = 0; i < _NumMetaballs; ++i)
    {
        float sphereDist = Sphere(pos + _MetaballTranslations[i].xyz, _MetaballRadii[i]);
        if (sphereDist < distance)
        {
            secondDistance = distance;
            distance = sphereDist;
        } else if (sphereDist < secondDistance && sphereDist >= distance) {
            secondDistance = sphereDist;
        }
    }
    return -SmoothMin(distance,secondDistance,_Smooth);
}
```

This led to some shading artifacts, so I tried out a version that applies SmoothMin to every sphere in turn, rather than just the nearest two.

```hlsl
float _Smooth;
uniform float4 _MetaballTranslations[16];
uniform float _MetaballRadii[16];
uniform int _NumMetaballs;

inline float DistanceFunction(float3 pos)
{
    float distance = 1./0.;
    // Blend every sphere into the running result, not just the nearest two.
    for (int i = 0; i < _NumMetaballs; ++i)
    {
        float sphereDist = Sphere(pos + _MetaballTranslations[i].xyz, _MetaballRadii[i]);
        distance = SmoothMin(distance,sphereDist,_Smooth);
    }
    // Negated: the open space is the inside of the balls.
    return -distance;
}
```

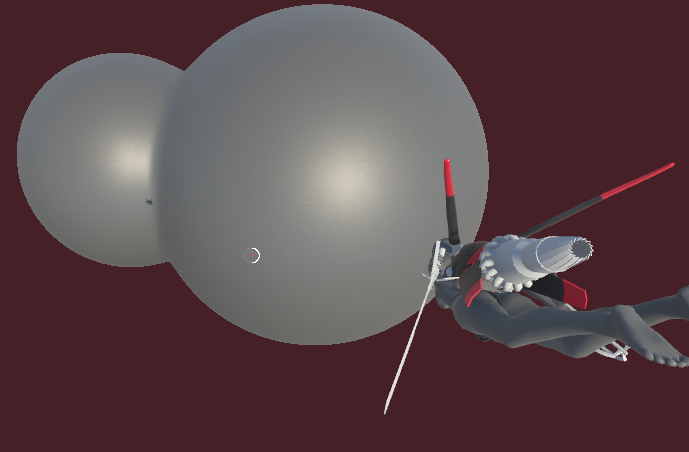

With these changes… finally, I can sit inside of some orbs.

The performance impact is… not crushing, but definitely noticeable when I fullscreen the game, basically cutting the framerate in half from 150fps to 70fps. This will only get worse as I add more metaballs to the SDF. One solution to this would potentially be to create some large bounding spheres which can be used to skip over groups of metaballs.
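Here is one way that bounding-sphere idea might look, sketched in C++ with the exponential smooth min assumed. Group, Ball, SmoothUnionWithCulling and the cutoff parameter are all hypothetical. Every member sits inside its group's bounding sphere, so no member can be closer than the bounds. If the bounds are farther away than cutoff, each skipped term exp(-k * d) is at most exp(-k * cutoff).

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

float SphereSdf(Vec3 p, Vec3 c, float r) {
    float x = p.x - c.x, y = p.y - c.y, z = p.z - c.z;
    return std::sqrt(x * x + y * y + z * z) - r;
}

struct Ball { Vec3 center; float radius; };

// A group of metaballs plus a sphere that encloses all of them.
struct Group {
    Ball bounds;
    std::vector<Ball> members;
};

// Exponential smooth min over all balls, -log(sum(exp(-k * d))) / k, skipping
// groups whose bounding sphere is farther than `cutoff`. If every group is
// skipped, the sum is zero and the result is +infinity (nothing nearby).
float SmoothUnionWithCulling(Vec3 p, const std::vector<Group>& groups, float k, float cutoff) {
    assert(k > 0.0f);
    float sum = 0.0f;
    for (const Group& g : groups) {
        if (SphereSdf(p, g.bounds.center, g.bounds.radius) > cutoff) continue;
        for (const Ball& b : g.members) sum += std::exp(-k * SphereSdf(p, b.center, b.radius));
    }
    return -std::log(sum) / k;
}
```

With a smooth blend, the skip is an approximation rather than exact, so the cutoff should be several multiples of 1/k. Uploading the groups to the shader would need extra arrays, which isn't shown here.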

I’m also wasting a lot of calculations repeatedly taking the logarithm and then undoing it with exponentiation. In fact, a better implementation using exponential smoothmin would look like…

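```hlsl
// Accumulate exp(-k * d) for every sphere, then take a single log at the end.
float expDistance = 0.0;

for (int i = 0; i < _NumMetaballs; ++i)
{
    float sphereDist = Sphere(pos + _MetaballTranslations[i].xyz, _MetaballRadii[i]);
    expDistance += exp( - _Smooth * sphereDist);
}

// The smooth min itself is -log(expDistance) / _Smooth; this returns its
// negation, matching the inverted (inside-the-balls) SDF above.
return log(expDistance)/_Smooth;
```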

Doing this immediately nets me 20fps back at fullscreen.
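Here is why this works. Assume SmoothMin is the exponential form -log(exp(-k a) + exp(-k b)) / k; that's an assumption, but it's the form the log/exp remark implies. Then exp(-k * SmoothMin(a, b)) is exactly exp(-k a) + exp(-k b), so folding it over every sphere collapses to one sum and one log. A C++ sketch checking that the two agree:

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Assumed form of the shader's SmoothMin (exponential smooth min).
float SmoothMin(float a, float b, float k) {
    return -std::log(std::exp(-k * a) + std::exp(-k * b)) / k;
}

// Folding SmoothMin over every sphere: one log and two exps per sphere.
// Starting from +infinity works because exp(-k * infinity) is 0.
float ChainedSmoothMin(const std::vector<float>& d, float k) {
    float distance = std::numeric_limits<float>::infinity();
    for (float di : d) distance = SmoothMin(distance, di, k);
    return distance;
}

// Same value with the log taken once: the intermediate log/exp pairs cancel.
float SumOfExponentials(const std::vector<float>& d, float k) {
    assert(k > 0.0f);
    float expDistance = 0.0f;
    for (float di : d) expDistance += std::exp(-k * di);
    return -std::log(expDistance) / k;
}
```

One caveat: exp(-k * d) grows quickly for negative d, so a large _Smooth combined with points deep inside a big ball could overflow a 32-bit float. The chained version computes the same sums, so it has the same limit.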

An alternative approach to rendering this would be to generate a triangle mesh approximating the implicit surface. The result would be something akin to Deep Rock Galactic. However, having gone to such efforts to implement raymarching, I’m going to save such graphics optimisations for after I’ve got other parts of the game working.

The verdict

Getting raymarching working presented some puzzles, but thanks to uRaymarching, I was spared the trouble of actually writing my own raymarching shader. (Something I would very much like to do when more time is available, mind.) Figuring out the correct signed distance field was also a puzzle, but I learned a bunch about interacting with shaders along the way. Now that the work is done, I like the result.

The next step is to use this same SDF calculation to implement collisions! This is, after all, theoretically the huge advantage of having an SDF on hand. I’ll go into this in the next devlog.
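As a preview of why an SDF helps with collisions, a sphere-shaped character can be pushed out of a wall using nothing but SDF evaluations. This is a hedged C++ sketch, not necessarily how the next devlog will do it. LevelSdf is a hypothetical single-ball level using this post's sign convention (positive in open space), and the normal comes from central differences.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical level: the inside of one ball of radius 5 is open space.
// Positive in open space, negative inside the walls, as in the raymarched level.
float LevelSdf(Vec3 p) {
    return 5.0f - std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
}

// Normal = normalized gradient of the SDF, estimated with central differences.
Vec3 LevelNormal(Vec3 p) {
    const float h = 1e-3f;
    Vec3 g = {LevelSdf({p.x + h, p.y, p.z}) - LevelSdf({p.x - h, p.y, p.z}),
              LevelSdf({p.x, p.y + h, p.z}) - LevelSdf({p.x, p.y - h, p.z}),
              LevelSdf({p.x, p.y, p.z + h}) - LevelSdf({p.x, p.y, p.z - h})};
    float len = std::sqrt(g.x * g.x + g.y * g.y + g.z * g.z);
    return {g.x / len, g.y / len, g.z / len};
}

// If the character's sphere overlaps the wall, push it out along the normal
// by the penetration depth (radius - distance).
Vec3 ResolveCollision(Vec3 center, float radius) {
    assert(radius > 0.0f);
    float d = LevelSdf(center);
    if (d >= radius) return center;  // no contact
    Vec3 n = LevelNormal(center);
    float push = radius - d;
    return {center.x + n.x * push, center.y + n.y * push, center.z + n.z * push};
}
```

A character of radius 1 at (4.5, 0, 0) is 0.5 units from the wall, so it gets pushed back to (4, 0, 0). The blended metaball SDF is only an approximate distance, so in practice a few iterations may be needed rather than one.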

This doesn’t mean convex colliders are right out, if we get them working. Ideally, a level could have both organic and inorganic structures. I could test for collisions against the SDF, and use Psyshock for the more meshy objects.

There are some potential problems with SDF rendering, apart from performance. One is that whenever the camera goes outside the ‘void’ space defined in the SDF, the screen becomes completely opaque. In contrast to the usual videogame world that consists of a paper-thin layer over a void, SDFs are solid inside.

A solution to this might be to add a box or cylinder or something that’s attached to the camera and boolean’d out of the SDF, essentially cutting a ‘window’ into the level when the camera is outside. Since the SDF takes coordinates in world space, this is somewhat tricky to implement, but I may be able to have a system that copies the camera’s transformation matrix into the shader every frame. I could detect whether we’re in a wall by evaluating the SDF again in a post-processing effect, then slightly grey out the level (or something similar) to indicate we’re looking through a wall.
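The ‘window’ is a standard SDF subtraction: take the max of the level's distance and the negated distance to the cutting shape. Here is a C++ sketch using Inigo's box SDF. It assumes a translation-only camera; a real version would transform by the camera's inverse matrix, as suggested above. CarveWindow and halfExtents are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Box SDF centred at the origin; b holds the half-extents.
float Box(Vec3 p, Vec3 b) {
    float qx = std::fabs(p.x) - b.x, qy = std::fabs(p.y) - b.y, qz = std::fabs(p.z) - b.z;
    float ox = std::max(qx, 0.0f), oy = std::max(qy, 0.0f), oz = std::max(qz, 0.0f);
    float outside = std::sqrt(ox * ox + oy * oy + oz * oz);
    float inside = std::min(std::max(qx, std::max(qy, qz)), 0.0f);
    return outside + inside;
}

// Subtraction: keep the level's solid only where it lies outside the window.
// Positive = open space, negative = wall, as in the raymarched level.
float CarveWindow(float levelDistance, Vec3 worldPos, Vec3 cameraPos, Vec3 halfExtents) {
    assert(halfExtents.x > 0.0f && halfExtents.y > 0.0f && halfExtents.z > 0.0f);
    // Translation-only camera transform, for brevity.
    Vec3 local = {worldPos.x - cameraPos.x, worldPos.y - cameraPos.y, worldPos.z - cameraPos.z};
    return std::max(levelDistance, -Box(local, halfExtents));
}
```

A point inside a wall (distance -2) at the camera's position becomes open space, while the same wall 10 units away stays solid.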

uRaymarching only supports two shaders in the URP by default. Since I want to use a custom Fresnel-based shader on the walls of the level, this is a complication. However, I may be able to apply such lighting as a post-processing effect.

All this to come in the next few days! See you soon!
