So, the time has come! Let’s get the ‘thrust’ in THRUST//DOLL…

The primary mode of movement in THRUST//DOLL is to point your camera in a direction and either thrust in that direction or attach a grappling hook.

So the first element of the movement system has to be an orbiting camera controller. We’ve already laid the groundwork for this in the rotation system we built last time.

Simply put, every frame we need to read the mouse delta and rotate the camera around the centre. The centre won’t be exactly on the model, since we want the player to be able to see what they’re aiming at. So we need to move both the look target and the camera. But let’s start by moving the camera.

The orbiting is a little complex because we’re not simply rotating, which could be done with two quaternions to represent rotation around the X and Y axes, but moving over the surface of a sphere. In a normal first or third person camera, if you rotate the camera to point straight up, it stops rather than turning upside down. The camera remains gimbal-locked, pointing in a fixed direction and only able to roll. This is intuitive to most users, but I think it would be cool to be able to rotate up and over… well, we’ll see.

Let’s dig in…

Getting control of the camera

We can make some progress with the character controller in the Latios Framework fireworks demo. In this design, the controller is split into a few systems. There is a PlayerGameplayReadInputSystem which reads mouse and keyboard information and stashes it in a component called DesiredAction, which says, for example, which direction the player wishes to turn and whether they want to jump, crouch or reload.

This is then consumed (for our purposes) by AimSystem, which tracks the orientation of the player. Sure enough, it clamps the vertical rotation to prevent the player from turning upside down. It also imposes a maximum turning speed horizontally. We might want to change that maths, but this seems like a reasonable starting point.

AimSystem does not, however, move the camera itself. That functionality comes in the… promisingly named CameraManagerCameraSyncHackSystem. Its approach seems… rather overcomplicated. I’m not sure why it’s doing all the stuff it’s doing.

Instead, let’s just get the main camera with UnityEngine.Camera.main, which should be cached for performance reasons.

Now, how to set up the scene? Let’s create a new scene, with an ECS subscene containing the parts that will be universal per level. We won’t worry too much about transitioning between scenes for now. ECS stuff is only loaded if it’s in a Subscene. The way the editor works is that toggling a Subscene on or off only determines whether it’s open for editing, and if it’s in the scene it will load either way, so until I’ve taken manual control of the loading of scenes and subscenes, I’ll need to create both a new Scene and a new Subscene. Sigh. It can’t be helped.

Anyway, having done all that, and gotten through updating Unity (turns out you pretty much have to delete your Library folder and force Unity to reimport everything…), what components do we need? Let’s follow the pattern in the Latios controller, and write a component with the intended actions. And further, let’s write a component with the state of the character.

The character should track…

- an orientation—but that’s just stored in the transform.

- a velocity—this can be its own component

- whether the grappling hook is attached—we’ll worry about this later

When the player thrusts in a particular direction, the doll should orient itself towards the target direction and then add an impulse to the velocity. Over time, the doll should then gradually realign to the actual direction of motion.

The camera controller will store…

- an orientation (a quaternion)

- a distance from the player (fixed for now)

This will be used to get a world space position. We also need to track a camera target, which as Masahiro Sakurai warns, should not necessarily centre on the character.

OK, let’s get some code down!

A note on formatting

Last time the code may have been a bit hard to read due to very long lines (SystemAPI.This().That().SomethingElse<Type>().Build()). I’ve taken a couple of measures to address that.

Firstly code blocks will be wider. Secondly, I’ve decided to adopt an Elm-style indentation pattern in my code. This isn’t really strictly what the C# style guide says, but I find it makes the code considerably more readable, and it should help with Git diffs too. The main thing that might be surprising is beginning lines with commas, like this:

{ foo

, bar

, bey

}

…which looks weird at first but is actually a really nice way to list arguments and fields in my experience.

Rotating a camera

First, components:

struct CameraController : IComponentData

{

public quaternion Orientation;

public float Distance;

}

public struct Intent : IComponentData

{

public float2 Rotate;

}

This Intent will gradually fill up with more desired actions. Or it may be better to keep it separate to minimise the amount of data that has to be read by different systems. We’ll see.

The CameraController keeps the entity-side view of the camera’s status. This is arguably unnecessary for orientation since it can be read from the camera GameObject, but the idea of ‘distance from target’ (truck in/out) is something we need to store, and later we may want to store a different rotation to the actual camera object itself.

My first experiment was to simply treat the mouse movements as Euler angles, convert them to a quaternion, and apply it. The result is predictably complete chaos, but it proves that this approach does give me access to the camera:

partial class InputReadingSystem : SystemBase

{

protected override void OnCreate()

{

EntityManager

.AddComponent<Intent>(SystemHandle);

}

protected override void OnUpdate()

{

var mouse = Mouse.current;

float2 delta = mouse.delta.ReadValue() * 0.001f; // hardcoded sensitivity; float literal so the multiply compiles

Intent intent = new Intent { Rotate = delta };

SystemAPI

.SetComponent<Intent>(SystemHandle, intent);

}

}

[UpdateAfter(typeof(InputReadingSystem))]

public partial class CameraControllerSystem : SystemBase

{

private Camera _camera;

protected override void OnCreate()

{

EntityManager

.AddComponent<CameraController>(SystemHandle);

SystemAPI

.SetComponent<CameraController>

( SystemHandle

, new CameraController

{ Orientation = quaternion.identity

, Distance = 5f

}

);

_camera = Camera.main;

}

protected override void OnDestroy()

{

}

protected override void OnUpdate()

{

float2 intendedRotation =

SystemAPI

.GetSingleton<Intent>()

.Rotate;

quaternion rotation =

quaternion

.EulerXYZ

( 0f

, intendedRotation.y

, intendedRotation.x

);

RefRW<CameraController> controller =

SystemAPI

.GetComponentRW<CameraController>(SystemHandle);

quaternion newOrientation =

math.mul

( rotation

, controller.ValueRO.Orientation

);

controller.ValueRW.Orientation =

newOrientation;

_camera.transform.localRotation =

newOrientation;

}

}

Nothing complicated is happening here. We’re reading the mouse delta, multiplying it by a hardcoded sensitivity, then packing it into a component that belongs to the InputReadingSystem. After that, we read the rotation from that component using GetSingleton, and turn it into a quaternion using Euler angles. We rotate the current orientation with that quaternion, stash the result on the component and copy it to the camera. (Helpfully, the vector and quaternion structs in the Unity.Mathematics library convert implicitly to the corresponding types in the UnityEngine namespace.)

The resulting first person motion is… super screwy! Whatever sequence of Euler angles you pick, the camera rolls around in a way that’s hard to comprehend. Not surprising though. This is a silly way to write a camera controller. We’re trying to have our cake and eat it by combining quaternions and Euler angles in this way.
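To see where the unwanted roll comes from, here is a minimal sketch in plain C#. It is not the project's code: System.Numerics stands in for Unity.Mathematics so it can run outside Unity, and the `RollDemo` class and its method names are made up for illustration. It composes three quarter-turn rotations about fixed world axes (pitch up, yaw, pitch back down) and reports where the camera's right vector ends up.

```csharp
using System;
using System.Numerics;

// Hypothetical demo, not project code: System.Numerics stands in for
// Unity.Mathematics so this runs outside Unity.
public static class RollDemo
{
    // Pre-multiplies a rotation about a fixed world axis onto an orientation.
    static Quaternion RotateWorld(Quaternion orientation, Vector3 axis, float angle) =>
        Quaternion.Normalize(Quaternion.CreateFromAxisAngle(axis, angle) * orientation);

    // Pitches by a quarter turn, yaws by a quarter turn, then pitches back,
    // each time about a fixed world axis, and returns where the camera's
    // right vector (initially +X) ends up.
    public static Vector3 RightAfterLoop()
    {
        float quarterTurn = (float)(Math.PI / 2.0);
        Quaternion q = Quaternion.Identity;
        q = RotateWorld(q, Vector3.UnitX, quarterTurn);   // pitch
        q = RotateWorld(q, Vector3.UnitY, quarterTurn);   // yaw
        q = RotateWorld(q, Vector3.UnitX, -quarterTurn);  // pitch back
        return Vector3.Transform(Vector3.UnitX, q);
    }
}
```

The pitch was undone and no roll was ever requested, yet the right vector comes back pointing along the vertical axis: the camera has rolled a quarter turn. Small mouse deltas do the same thing in smaller increments, which is why the view drifts and tumbles.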

Also, this is currently a first person controller, since we’re rotating the camera around its origin (the point of view). What we actually want is a camera that orbits a target point. The camera has a helpful LookAt method, but if we want to stay in pure entities code, we can create an entity to represent the camera, parent it to the target, and rotate the target.

The actual logic we want is that, when the player moves the mouse left and right, the camera pivots so that it orbits left or right. Similarly for up and down. Usually a game camera essentially moves in YX Euler angles, where first pitch is applied, then yaw (roll is rarely a consideration). This means that yaw has a consistent axis, but pitch doesn’t. This makes it tricky for directly converting Euler angles into quaternions to apply as deltas.

We could therefore consider storing the camera’s Euler angles directly as floats, and reconstruct the quaternion on the fly. This means we modify CameraController to…

struct CameraController : IComponentData

{

public float Pitch;

public float Yaw;

public float Distance;

}

and use this logic in CameraControllerSystem:

protected override void OnUpdate()

{

float2 intendedRotation =

SystemAPI

.GetSingleton<Intent>()

.Rotate;

RefRW<CameraController> controller =

SystemAPI

.GetComponentRW<CameraController>(SystemHandle);

controller.ValueRW.Yaw += intendedRotation.x;

controller.ValueRW.Pitch += intendedRotation.y;

quaternion rotation =

quaternion

.EulerXYZ

( controller.ValueRO.Pitch

, controller.ValueRO.Yaw

, 0f

);

_camera.transform.localRotation = rotation;

}

This seems to work as intended, although we’ll need to set up a way to tune the sensitivity. However, there is now an issue: if the mouse reaches the edge of the screen, you stop rotating. So we need to capture the mouse. We only want to do this within the level, not the configurator. This can easily be done with

UnityEngine.Cursor.lockState = UnityEngine.CursorLockMode.Locked;

For now, until scene handling code is set up, I have placed this in the OnCreate of the InputReadingSystem.

Locking the cursor will make it difficult to leave our game! Ultimately there will be some kind of game menu which unlocks the cursor and allows the user to quit, but for now, hitting escape will break us out of cursor lock so we can leave it this way for development purposes.

The only remaining issue is that we have an inverted vertical axis. But that is an easy fix, changing controller.ValueRW.Pitch += intendedRotation.y to a -= instead. At this point we start to feel like we have a standard FPS camera controller. (We want third person, but we’re getting to that!)

Now we have another interesting problem. We can turn the camera upside down. This is rather unintuitive, so let’s clamp the pitch to the \([-\frac{\pi}{2},\frac{\pi}{2}]\) interval.

controller.ValueRW.Pitch = math.clamp(controller.ValueRO.Pitch, -math.PI/2, math.PI/2);
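Putting the pieces so far together, the following is a rough sketch of the accumulate, clamp and rebuild approach in plain C#. The `OrbitAngles` struct is a hypothetical stand-in for the CameraController component, and System.Numerics is used instead of Unity.Mathematics (an assumption, so the snippet runs outside Unity). `Quaternion.CreateFromYawPitchRoll` applies pitch before yaw, which matches the ordering described above.

```csharp
using System;
using System.Numerics;

// Hypothetical stand-in for the CameraController component.
public struct OrbitAngles
{
    const float HalfPi = (float)(Math.PI / 2.0);

    public float Pitch;
    public float Yaw;

    // Accumulates a mouse delta: the vertical axis is inverted and the
    // pitch is clamped to [-pi/2, pi/2] so the camera cannot flip over.
    public void Apply(Vector2 delta)
    {
        Yaw += delta.X;
        Pitch = Math.Max(-HalfPi, Math.Min(HalfPi, Pitch - delta.Y));
    }

    // Rebuilds the orientation from scratch each frame (roll is always 0),
    // so no error or roll can accumulate in a stored quaternion.
    public Quaternion ToQuaternion() =>
        Quaternion.CreateFromYawPitchRoll(Yaw, Pitch, 0f);
}
```

Because the quaternion is reconstructed from the two angles every frame, the camera's right vector always stays level, however far the mouse is pushed.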

OK, so we have a basic FPS-style controller. Now we need to make it third person.

First, we’ll create an entity that’s the camera look target. It has a tag component Target. Secondly we need an entities-friendly way to calculate the position of the camera. So let’s create another entity to stand in for the camera, whose position and orientation are what get copied to the camera itself. We can take advantage of the existing ParentSystem by simply parenting this camera entity to the target and rotating the target, which will save doing any more complicated lookup code. The camera entity gets a CameraTransform tag. (We don’t want a namespace collision so I won’t call it Camera. At some point soon I should implement a namespace for this project, actually.)

How should the target relate to the doll? We could parent it, but we would like the doll to be able to rotate independent of the camera sometimes. Let’s worry about that later and start by just placing the target somewhere. Then we parent the camera to it.

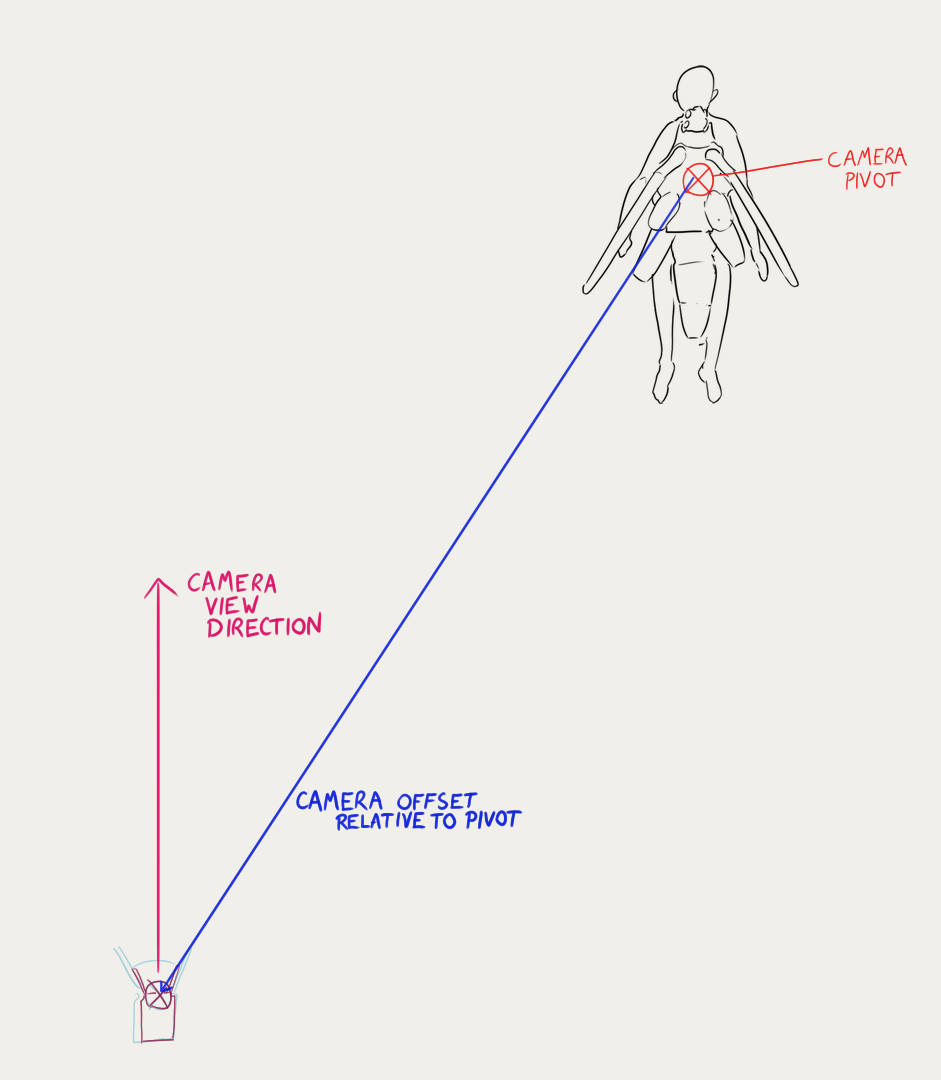

I originally believed that I would have to place the target somewhere slightly to the left of the doll—but with parenting, it is not necessary to have the ‘target’ actually be what the camera points at. We can arrange the scene like this instead:

So we have an entity where the camera is, we rotate the pivot, and we update the camera to match the transformation of this entity. The doll will always be at the same position on the screen.

There’s only one small problem implementing this arrangement, which is that the camera transform will only be updated to follow its parent by the ParentSystem, so we’ll need to either implement the parent transform ourselves, or hold off updating the camera GameObject until after that point. That’s easy enough to do though: we can just use an UpdateAfter directive.

First we have a system that runs before the parent system, rotating the pivot the way we were just rotating the camera. Then we have a system that runs after the parent system, which copies the camera transform to the actual camera.
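For intuition, this is roughly the arithmetic that parenting the camera entity to the pivot performs for us. It is only a sketch in plain C# with System.Numerics (an assumption, to keep it runnable outside Unity); `OrbitMath` and `ChildWorldPosition` are made-up names, and a parent scale of 1 is assumed.

```csharp
using System.Numerics;

// Hypothetical illustration of what parenting the camera entity to the
// pivot works out to; not part of the project code.
public static class OrbitMath
{
    // World position of a child, given its parent's world position and
    // rotation and the child's offset in the parent's local space.
    // Assumes the parent is not scaled.
    public static Vector3 ChildWorldPosition(
        Vector3 pivotPosition, Quaternion pivotRotation, Vector3 localOffset) =>
        pivotPosition + Vector3.Transform(localOffset, pivotRotation);
}
```

Whatever the pivot's rotation, the result stays at a fixed distance from the pivot (the length of the local offset), which is exactly the 'moving over the surface of a sphere' behaviour we wanted. An offset with a sideways component, for example a made-up (0.5, 0, -5), keeps the doll off-centre on screen.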

(An alternative might be to set a RotationPivot component on an entity, in which case we’d no longer need a pivot entity, we can just set a RotationPivot component on the CameraTransform and track the rotation there. However, this doesn’t really seem like it would save a lot of complexity.)

Now, at this point, ideally we’d just copy the local-to-world matrix of this component onto the camera. Alas, Unity won’t let it be that simple. We will have to update the position and rotation. Unity needs to give us proper DOTS-native camera access already…

(The reason for the complexity in the Latios version is that it is jobified. We won’t worry about jobifying our code for now.)

The resulting code is…

Camera controller system & camera update system

using Unity.Entities;

using Unity.Mathematics;

using Unity.Transforms;

using Unity.Burst;

[UpdateAfter(typeof(InputReadingSystem))]

[BurstCompile]

partial struct CameraControllerSystem : ISystem

{

[BurstCompile]

public void OnCreate(ref SystemState state)

{

state.EntityManager

.AddComponent<CameraController>(state.SystemHandle);

SystemAPI

.SetComponent<CameraController>

( state.SystemHandle

, new CameraController

{ Pitch = 0f

, Yaw = 0f

, Distance = 5f

}

);

state.RequireForUpdate<Intent>();

state.RequireForUpdate<CameraPivot>();

}

[BurstCompile]

public void OnDestroy(ref SystemState state)

{

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

float2 intendedRotation =

SystemAPI

.GetSingleton<Intent>()

.Rotate;

RefRW<CameraController> controller =

SystemAPI

.GetComponentRW<CameraController>

( state.SystemHandle

);

RefRW<Rotation> pivot =

SystemAPI

.GetComponentLookup<Rotation>()

.GetRefRW

(SystemAPI.GetSingletonEntity<CameraPivot>()

, false);

controller.ValueRW.Yaw += intendedRotation.x;

controller.ValueRW.Pitch -= intendedRotation.y;

controller.ValueRW.Pitch =

math.clamp

( controller.ValueRO.Pitch

, -math.PI/2

, math.PI/2

);

pivot.ValueRW.Value =

quaternion.EulerXYZ

( controller.ValueRO.Pitch

, controller.ValueRO.Yaw

, 0f

);

}

}

using Unity.Entities;

using Unity.Transforms;

[UpdateInGroup(typeof(LateSimulationSystemGroup))]

partial class CameraUpdateSystem : SystemBase

{

private UnityEngine.Camera _camera;

protected override void OnCreate() {

_camera = UnityEngine.Camera.main;

}

protected override void OnUpdate() {

Entity cameraTransform =

SystemAPI

.GetSingletonEntity<CameraTransform>();

LocalToWorld transform =

SystemAPI

.GetComponent<LocalToWorld>(cameraTransform);

_camera.transform.position = transform.Position;

_camera.transform.rotation = transform.Rotation;

}

}

Curiously, with this configuration, moving the camera adds about 1.5ms to the frame time. I peered at the profiler a bit but I’m still not entirely sure why this might be. The systems written here do not take up a large part of the frame time, so perhaps it is something else? Anyway, that is to worry about later. It might even disappear once we leave the editor!

In any case, the system works: we can pivot around the doll in the way expected.

This example already shows a couple of issues with this system. The camera can, not surprisingly, clip into geometry. And when you gimbal-lock the camera by pushing it all the way down, we get this strange effect of circling the doll.

However, this suffices for a proof of concept! Let’s add some movement.

Adding movement

Velocity

The most basic thing that we need for movement is a velocity. The doll certainly won’t be the only thing with a velocity; indeed this is a paradigmatic case for where Entity Component Systems shine. So let’s create a Velocity component and a VelocitySystem that moves everything according to its velocity. We’ll also need to update the position of the pivot to follow the character now the character is moving.

This could hardly be simpler…

Velocity System

using Unity.Entities;

using Unity.Transforms;

using Unity.Burst;

[UpdateBefore(typeof(TransformSystemGroup))]

[BurstCompile]

partial struct VelocitySystem : ISystem

{

[BurstCompile]

public void OnCreate(ref SystemState state)

{

}

[BurstCompile]

public void OnDestroy(ref SystemState state)

{

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

float deltaTime =

SystemAPI

.Time

.DeltaTime;

new VelocityJob

{ DeltaTime = deltaTime

}

.ScheduleParallel();

}

}

[BurstCompile]

partial struct VelocityJob : IJobEntity

{

public float DeltaTime;

void Execute(in Velocity velocity, ref Translation position)

{

position.Value += velocity.Value * DeltaTime;

}

}

Thrust

This will do very little if we don’t have a way to set our velocity. For the minimum possible version of the THRUST//DOLL movement system, we will simply add a fixed acceleration in the direction the camera is facing for a brief period when the user clicks.

This means we need to handle durations. We could either store the time when the user clicks and subtract it from the current time on each frame to test whether to stop applying the acceleration, or we can track a ‘lifetime’ variable.
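As a rough model of the ‘lifetime’ option, here is a plain C# sketch with no ECS involved. `DollState` and its fields are hypothetical names for illustration, and System.Numerics stands in for Unity.Mathematics. Each frame it accelerates while the thrust is still alive, counts the lifetime down, then moves by the velocity.

```csharp
using System.Numerics;

// Hypothetical plain-C# model of the plan; not the ECS implementation.
public struct DollState
{
    public Vector3 Position;
    public Vector3 Velocity;
    public Vector3 ThrustAcceleration;
    public float ThrustTimeRemaining;

    // Advances the simulation by one frame.
    public void Step(float deltaTime)
    {
        if (ThrustTimeRemaining > 0f)
        {
            // Thrust is still alive: accelerate and count its lifetime down.
            Velocity += ThrustAcceleration * deltaTime;
            ThrustTimeRemaining -= deltaTime;
        }
        // Always move according to the current velocity.
        Position += Velocity * deltaTime;
    }
}
```

Once the lifetime runs out the doll coasts at a constant velocity of roughly acceleration × duration. The exact figure depends on how the frame times line up with the duration, since the thrust is applied in whole frames.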

In fact, this ‘limited duration effect’ concept is likely something we want to reuse. So let’s make a general component for a time-limited status. It will essentially count down its remaining lifetime, and once it’s reached the end of its life, register a command in a buffer to delete itself.

Except it is unfortunately not that simple: we don’t want to delete the entire entity, just the status. So if we represent ‘duration of effect’ as a component, we need to somehow link it to the actual status.

What we’re really looking for is something like an interface, but is there a way to query by interface in DOTS? Perhaps we can create an Aspect along with an Interface, and give that aspect a field with a reference to the interface.

So, here we go, let’s see if this works.

public interface IStatus : IComponentData

{

public float TimeRemaining;

}

Mistake 1: interfaces can’t specify member fields. Whoops. Better make this a property.

using Unity.Entities;

readonly partial struct StatusAspect : IAspect

{

readonly RefRW<IStatus> status;

}

Mistake 2:

Assets/Scripts/Aspects/StatusAspect.cs(5,29): error CS0453: The type ‘IStatus’ must be a non-nullable value type in order to use it as parameter ‘T’ in the generic type or method ‘RefRW<T>’

Welp. So much for that. If we can’t store a reference to it, we can’t put it in an Aspect, and we can’t define an API. But I’m not entirely surprised this didn’t work—how could the compiler figure out what specific place to jump in memory to read a certain value, given only knowledge of an interface it implements? High-performance C# does not like reflection.

What about a generic Aspect?

While rereading this later, it occurred to me that maybe I was simply declaring a generic aspect wrong. If I wrote a true generic with a type parameter, perhaps it would work?

using Unity.Entities;

readonly partial struct StatusAspect<T> : IAspect

where T: unmanaged, IComponentData, IStatus

{

readonly RefRW<T> Status;

public float TimeRemaining

{

get => Status.ValueRO.TimeRemaining;

set => Status.ValueRW.TimeRemaining = value;

}

}

Unfortunately this resulted in an error saying ‘Generic Aspects are not supported’. So that’s a non-starter.

All is not lost, though, we’re just looking in the wrong place. Instead, we can create a generic job with the code we want to reuse—besides parallelisation, this is one of the major reasons for generic jobs. Unfortunately that won’t let us write a generic system that handles everything with a duration, and we’ll have to schedule a job for every possible variant.

So here’s a new version of the IStatus interface:

public interface IStatus : IComponentData

{

public float TimeRemaining { get; set; }

}

And here’s the job to process it:

using Unity.Entities;

public struct StatusCountdownJob<T> : IJobEntity

where T : IStatus

{

public float DeltaTime;

public EntityCommandBuffer.ParallelWriter ECB;

void Execute([ChunkIndexInQuery] int chunkIndex, Entity entity, ref T status) {

status.TimeRemaining -= DeltaTime;

if (status.TimeRemaining < 0) {

ECB.RemoveComponent<T>(chunkIndex, entity);

}

}

}

Damn, feels almost like we’re writing Rust now! (I said, naively.) So now we can create a job to count down any temporary status effects we wish to apply in the future.
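The underlying language feature can be tried without Unity at all. Below is a minimal sketch in plain C#; `ICountdown`, `Stun` and `Countdown.Tick` are made-up names that mirror the idea rather than the project's actual types. The point is the constraint: because T must be a struct implementing the interface, .NET specialises the generic method for each value type, so the property is accessed directly on the struct rather than through a boxed interface reference.

```csharp
// Hypothetical plain-C# version of the idea, without Unity's job system.
public interface ICountdown
{
    float TimeRemaining { get; set; }
}

// Made-up status effect for illustration.
public struct Stun : ICountdown
{
    public float TimeRemaining { get; set; }
}

public static class Countdown
{
    // Counts the status down in place and reports whether it has expired.
    // The struct constraint means the status is never boxed.
    public static bool Tick<T>(ref T status, float deltaTime)
        where T : struct, ICountdown
    {
        status.TimeRemaining -= deltaTime;
        return status.TimeRemaining < 0f;
    }
}
```

Any struct that implements the interface can be passed to `Tick` by reference, which is the same shape as the `ref T status` parameter in the job above.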

Now let’s actually create a way to set this thrust. We’ll add a bool field to the ‘intent’ which will record whether the user wants to thrust. If this is detected, we will set two components: a thrust and a thrust cooldown. Already getting some use out of our new interface!

Here’s a component for thrust…

using Unity.Entities;

using Unity.Mathematics;

public struct Thrust : IStatus

{

public float TimeRemaining {get; set;}

public float3 Acceleration;

}

And here’s a component for a thrust cooldown…

using Unity.Entities;

public struct ThrustCooldown : IStatus

{

public float TimeRemaining {get; set;}

public float InverseDuration;

}

The inverse duration field will be used to draw an interface element indicating the cooldown.
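As a sketch of how that might look (the UI code itself does not exist yet, so `CooldownUi` and `FractionRemaining` are hypothetical names), the fill amount for a cooldown indicator is just the remaining time multiplied by the stored inverse. Presumably the inverse is stored so that the UI can multiply instead of dividing on every update, though that is my reading rather than something stated above.

```csharp
// Hypothetical helper for a cooldown indicator; not part of the project yet.
public static class CooldownUi
{
    // Fraction of the cooldown still to run: 1 just after thrusting,
    // falling to 0 when the thrust is ready again. Clamped to [0, 1]
    // because TimeRemaining can dip below zero on its final frame.
    public static float FractionRemaining(float timeRemaining, float inverseDuration)
    {
        float fraction = timeRemaining * inverseDuration;
        if (fraction < 0f) return 0f;
        if (fraction > 1f) return 1f;
        return fraction;
    }
}
```

With a 5 second cooldown the inverse duration is 0.2, so halfway through (2.5 seconds remaining) the indicator would be half full.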

Next, a System to read mouse presses and apply these effects. We can get whether the mouse has just been clicked with the wasPressedThisFrame property.

The system that handles this has to touch quite a bit of data. First we need a thrust direction vector. This comes from the orientation of the camera pivot. In Unity, the camera faces in the z direction, so we need to rotate a vector pointing in the z direction with the relevant quaternion.

quaternion rotation =

SystemAPI.GetComponent<Rotation>

( SystemAPI.GetSingletonEntity<CameraPivot>()

)

.Value;

float3 acceleration =

math.mul

( rotation

, new float3(0f, 0f, 10f)

);

Todo soon: create a config entity which will contain parameters like mouse sensitivity and acceleration.

Having generated the acceleration, we need to register a command to add the appropriate components to the player entity. The question is which ECB to put this command in. Ideally we’d do this part of input handling early in the frame, so we can use it in the ‘simulation’ in the very same frame. This means we may need to move our input reading system back to the initialisation system group.

However, now we’re using wasPressedThisFrame, we probably want to actually poll inputs as late as possible. Otherwise, is there some possibility that we’d randomly drop inputs towards the end of the frame? On some further investigation, this depends on the InputSystem’s updateMode, which by default runs before GameObjects are updated, so after the Initialisation group but before the systems’ OnUpdate. So ‘was pressed this frame’ means ‘was pressed between the last two GameObject update steps’. We can take control of this and decide where to update the inputs ourselves, but I think I’m all right with the default for now.

We have a couple of command buffers that run before the TransformSystemGroup we could use, but to make this work, we’ll need to shift the InputReadingSystem back before them, and that’s tricky without actually putting the InputReadingSystem inside those groups, which isn’t really appropriate. I am beginning to think the Latios Framework means of explicit system ordering might be a better idea…

Another option might be to use enableable components, which can be added and removed without a structural change. But for now, let’s not prematurely optimise, and simply add the acceleration components at the end of the frame. So when you click, it will be a frame delay before you start accelerating. Given we probably want a wind-up animation anyway, I don’t think this is an issue.

Thrust Start System

using Unity.Entities;

using Unity.Burst;

using Unity.Transforms;

using Unity.Mathematics;

[UpdateAfter(typeof(InputReadingSystem))]

[BurstCompile]

partial struct ThrustStartSystem : ISystem

{

[BurstCompile]

public void OnCreate(ref SystemState state)

{

state.RequireForUpdate<Character>();

state.RequireForUpdate<CameraPivot>();

state.RequireForUpdate<Intent>();

}

[BurstCompile]

public void OnDestroy(ref SystemState state)

{

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

if (SystemAPI.GetSingleton<Intent>().Thrust)

{

quaternion rotation =

SystemAPI

.GetComponent<Rotation>

( SystemAPI.GetSingletonEntity<CameraPivot>()

)

.Value;

float3 acceleration = math.mul(rotation,new float3(0f, 0f, 10f ));

Entity player = SystemAPI.GetSingletonEntity<Character>();

EntityCommandBuffer ecb =

SystemAPI

.GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>()

.CreateCommandBuffer(state.WorldUnmanaged);

ecb.AddComponent(player,

new Thrust

{

TimeRemaining = 0.5f,

Acceleration = acceleration

});

ecb.AddComponent(player,

new ThrustCooldown {

TimeRemaining = 5f,

InverseDuration = 0.2f

});

}

}

}

That bit went pretty easy. From here on it gets horrible.

Adventures in generics

Now we need to do something with these components we’ve added. This was my first attempt:

using Unity.Entities;

using Unity.Burst;

[BurstCompile]

partial struct ThrustAccelerationSystem : ISystem

{

[BurstCompile]

public void OnCreate(ref SystemState state)

{

}

[BurstCompile]

public void OnDestroy(ref SystemState state)

{

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

float deltaTime = SystemAPI.Time.DeltaTime;

new ThrustAccelerationJob { DeltaTime = deltaTime }

.Schedule();

}

}

public partial struct ThrustAccelerationJob : IJobEntity

{

public float DeltaTime;

void Execute(in Thrust thrust, ref Velocity velocity)

{

velocity.Value += thrust.Acceleration * DeltaTime;

}

}

That’ll do for actually applying the acceleration while the components are alive; now we need to delete them. Maybe we could write something like…

using Unity.Entities;

[BurstCompile]

partial struct StatusTickSystem : ISystem

{

[BurstCompile]

public void OnCreate(ref SystemState state)

{

}

[BurstCompile]

public void OnDestroy(ref SystemState state)

{

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

float deltaTime = SystemAPI.Time.DeltaTime;

new StatusCountdownJob<Thrust>

{ DeltaTime = deltaTime

}

.Schedule();

new StatusCountdownJob<ThrustCooldown>

{ DeltaTime = deltaTime

}

.Schedule();

}

}

partial struct StatusCountdownJob<T> : IJobEntity

where T: IStatus

{

public float DeltaTime;

public EntityCommandBuffer.ParallelWriter ecb;

void Execute (Entity entity, ref T status)

{

status.TimeRemaining -= DeltaTime;

if (status.TimeRemaining < 0)

{

ecb.RemoveComponent<T>(entity);

}

}

}

That is nice and simple and it looks like it should work, right?

Unfortunately, it turns out that IJobEntity is actually invoking code generation behind the scenes, and it doesn’t support generics. The error message did not make that clear at all, and I’m going to reproduce it here in case someone googles this…

Assets/Scripts/Systems/Level/StatusTickSystem.cs(26,13): error CS0315: The type ‘StatusCountdownJob<Thrust>’ cannot be used as type parameter ‘T’ in the generic type or method ‘IJobEntityExtensions.Schedule<T>(T)’. There is no boxing conversion from ‘StatusCountdownJob<Thrust>’ to ‘Unity.Entities.InternalCompilerInterface.IIsFullyUnmanaged’.

The solution suggested in this thread is to write your own batch iteration, using an IJobChunk. At this point I think we’ve lost pretty much anything we’d have gained by making something reusable and generic, but in the interests of learning how the systems work, I decided to turn my hand to it anyway.

So what does an IJobChunk actually do? Like IJobEntity we have to write an Execute function. Unity passes it a chunk, which is a collection of entities with the same archetype. The job receives ‘type handles’ identifying specific components, and entities. Using these type handles, it can generate a NativeArray (basically a normal C array) containing all the data corresponding to that component in the chunk. We can then iterate through this data using a standard for loop, in a way that makes the CPU cache happy.

The useEnabledMask and chunkEnabledMask parameters are required by the interface, and deal with enableable components. The mask is essentially a series of bits saying whether a component is enabled or not. I took the easy option and made it so that it could only be used with non-enableable components, rather than writing code to handle this bitmask.
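One detail of iterating over an array of structs this way is worth seeing in isolation. NativeArray’s indexer returns a copy of the element, so changing a field on that copy does nothing until it is written back. The sketch below shows the same pattern in plain C# using `List<T>`, whose indexer behaves the same way for structs; `Timer` and `WriteBack` are made-up names for illustration.

```csharp
using System.Collections.Generic;

// Made-up component for illustration.
public struct Timer
{
    public float TimeRemaining;
}

public static class WriteBack
{
    // List<T>'s indexer, like NativeArray<T>'s, hands back a copy of a
    // struct element, so each change has to be written back explicitly.
    public static void TickAll(List<Timer> timers, float deltaTime)
    {
        for (int i = 0; i < timers.Count; ++i)
        {
            Timer timer = timers[i];          // copy the element out
            timer.TimeRemaining -= deltaTime; // modify the copy
            timers[i] = timer;                // write the copy back
        }
    }
}
```

Forgetting the final assignment leaves the stored values untouched, which in a countdown means nothing ever expires.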

Here’s the job I came up with:

using Unity.Assertions;

using Unity.Entities;

using Unity.Burst;

using Unity.Burst.Intrinsics;

using Unity.Collections;

[BurstCompile]

public partial struct StatusCountdownJob<T> : IJobChunk

where T : unmanaged, IComponentData, IStatus

{

public float DeltaTime;

public EntityCommandBuffer.ParallelWriter ECB;

public ComponentTypeHandle<T> StatusHandle;

public EntityTypeHandle EntityHandle;

public void Execute(in ArchetypeChunk chunk, int unfilteredChunkIndex,

bool useEnabledMask, in v128 chunkEnabledMask) {

//not usable with enableable components

Assert.IsFalse(useEnabledMask);

NativeArray<T> statuses =

chunk

.GetNativeArray<T>(ref StatusHandle);

NativeArray<Entity> entities =

chunk

.GetNativeArray(EntityHandle);

for (int i = 0; i < chunk.Count; ++i)

{

T status = statuses[i];

status.TimeRemaining -= DeltaTime;

statuses[i] = status;

if (status.TimeRemaining < 0) {

ECB.RemoveComponent<T>

( unfilteredChunkIndex

, entities[i]

);

}

}

}

}

In most examples I’ve seen, T : struct is written instead of T : unmanaged. However, this resulted in an error message:

Assets/Scripts/GenericJobs/StatusCountdownJob.cs(21,41): error CS8377: The type ‘T’ must be a non-nullable value type, along with all fields at any level of nesting, in order to use it as parameter ‘T’ in the generic type or method ‘ArchetypeChunk.GetNativeArray<T>(ref ComponentTypeHandle<T>)’

I asked about this on the Unity Discord and was advised to change struct to unmanaged. This fixed the problem. I believe this is due to a change introduced in Entities 1.0.

Now we can actually use these generic jobs in code. It ends up with a lot of boilerplate:

using Unity.Entities;
using Unity.Burst;

[BurstCompile]
partial struct StatusTickSystem : ISystem
{
    [BurstCompile]
    public void OnCreate(ref SystemState state)
    {
    }

    [BurstCompile]
    public void OnDestroy(ref SystemState state)
    {
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        EntityTypeHandle entityHandle =
            SystemAPI.GetEntityTypeHandle();

        ComponentTypeHandle<Thrust> thrustHandle =
            SystemAPI
                .GetComponentTypeHandle<Thrust>(false);

        ComponentTypeHandle<ThrustCooldown> thrustCooldownHandle =
            SystemAPI
                .GetComponentTypeHandle<ThrustCooldown>(false);

        float deltaTime = SystemAPI.Time.DeltaTime;

        EntityQuery thrustQuery =
            SystemAPI
                .QueryBuilder()
                .WithAll<Thrust>()
                .Build();

        EntityQuery thrustCooldownQuery =
            SystemAPI
                .QueryBuilder()
                .WithAll<ThrustCooldown>()
                .Build();

        var ecbSystem =
            SystemAPI
                .GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>();

        var thrustJob = new StatusCountdownJob<Thrust>
            { DeltaTime = deltaTime
            , ECB = ecbSystem
                .CreateCommandBuffer(state.WorldUnmanaged)
                .AsParallelWriter()
            , StatusHandle = thrustHandle
            , EntityHandle = entityHandle
            };

        state.Dependency =
            thrustJob
                .Schedule
                    ( thrustQuery
                    , state.Dependency
                    );

        var thrustCooldownJob = new StatusCountdownJob<ThrustCooldown>
            { DeltaTime = deltaTime
            , ECB = ecbSystem
                .CreateCommandBuffer(state.WorldUnmanaged)
                .AsParallelWriter()
            , StatusHandle = thrustCooldownHandle
            , EntityHandle = entityHandle
            };

        state.Dependency =
            thrustCooldownJob
                .Schedule
                    ( thrustCooldownQuery
                    , state.Dependency
                    );
    }
}

Horrible. And we’re going to have to add all that for every single StatusCountdownJob we might come up with in the future.

Worse, all this boilerplate can lead to hard-to-fix mistakes. I lost a day trying to unpick a strange out-of-bounds error. See if you can spot the mistake in the next code block, which would come at the end of OnUpdate.

var thrustCooldownJob = new StatusCountdownJob<Thrust>
    { DeltaTime = deltaTime
    , ECB = ecbSystem
        .CreateCommandBuffer(state.WorldUnmanaged)
        .AsParallelWriter()
    , StatusHandle = thrustHandle
    , EntityHandle = entityHandle
    };

state.Dependency =
    thrustCooldownJob
        .Schedule
            ( thrustCooldownQuery
            , state.Dependency
            );

Seen it? Yeah, me neither, for like a day. But that <Thrust> should be <ThrustCooldown> and that thrustHandle should be thrustCooldownHandle. It turned out I’d created a job with the Thrust variant type and handle, but then passed it an entity query for ThrustCooldown. Whoops.

Perhaps we can improve matters a bit by writing a generic function to schedule a generic job. It would go something like this…

[BurstCompile]
void scheduleJob<T>(ref SystemState state)
    where T: unmanaged, IComponentData, IStatus
{
    ComponentTypeHandle<T> statusHandle =
        SystemAPI
            .GetComponentTypeHandle<T>(false);

    EntityTypeHandle entityHandle =
        SystemAPI
            .GetEntityTypeHandle();

    float deltaTime =
        SystemAPI
            .Time
            .DeltaTime;

    EntityQuery statusQuery =
        SystemAPI
            .QueryBuilder()
            .WithAll<T>()
            .Build();

    var ecbSystem =
        SystemAPI
            .GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>();

    var job = new StatusCountdownJob<T>
        { DeltaTime = deltaTime
        , ECB = ecbSystem
            .CreateCommandBuffer(state.WorldUnmanaged)
            .AsParallelWriter()
        , StatusHandle = statusHandle
        , EntityHandle = entityHandle
        };

    state.Dependency =
        job
            .ScheduleParallel
                ( statusQuery
                , state.Dependency
                );
}

Try compiling this and, surprise surprise! You can’t use SystemAPI with generics either.

I think a refactor is in order, because wow does this code stink. But it does, at least, finally work now so let’s get something else implemented.

Making the camera follow the character

At this point if you aim the camera and click, the doll flies off into the distance. We need the camera pivot to follow.

That’s easy enough to do, at least. We could add a few lines to the camera controller system, but actually it’s a little more complicated than that. We want the following sequence of systems to run to deal with camera orientation:

1. InputReadingSystem: gets the mouse orientation and whether to thrust.
2. CameraControllerSystem: pivots the camera to match the new orientation.
3. ThrustStartSystem: signals to initiate a thrust on the next frame. Depends on CameraControllerSystem having run to get updated orientation.

Separately we have the ‘kinematics chain’:

1. ThrustAccelerationSystem: accelerates the doll if she’s thrusting, changing her velocity.
2. VelocitySystem: updates the position of the doll according to her velocity.

We want to move the camera pivot after VelocitySystem. While we could force CameraControllerSystem to update after VelocitySystem, this would couple everything very tightly, so it’s probably not the best way to go about it. Instead, I’m going to make a new CameraPivotTranslationSystem to move the camera pivot. For now this will simply copy the position of the doll.

Juggling all these Systems is getting unwieldy. I think we might want to create some system groups soon.

Here’s CameraPivotTranslationSystem:

using Unity.Entities;
using Unity.Transforms;
using Unity.Jobs;
using Unity.Burst;

[UpdateAfter(typeof(VelocitySystem))]
[UpdateBefore(typeof(TransformSystemGroup))]
[BurstCompile]
partial struct CameraPivotTranslationSystem : ISystem
{
    [BurstCompile]
    public void OnCreate(ref SystemState state)
    {
    }

    [BurstCompile]
    public void OnDestroy(ref SystemState state)
    {
    }

    [BurstCompile]
    public void OnUpdate(ref SystemState state)
    {
        ComponentLookup<Translation> translationLookup =
            SystemAPI
                .GetComponentLookup<Translation>(false);

        Entity characterEntity =
            SystemAPI
                .GetSingletonEntity<Character>();

        Entity pivotEntity =
            SystemAPI
                .GetSingletonEntity<CameraPivot>();

        var job = new CameraPivotTranslationJob
            { TranslationLookup = translationLookup
            , CharacterEntity = characterEntity
            , PivotEntity = pivotEntity
            };

        state.Dependency =
            job.Schedule(state.Dependency);
    }
}

[BurstCompile]
partial struct CameraPivotTranslationJob : IJob
{
    public ComponentLookup<Translation> TranslationLookup;
    public Entity CharacterEntity;
    public Entity PivotEntity;

    public void Execute()
    {
        TranslationLookup[PivotEntity] =
            TranslationLookup[CharacterEntity];
    }
}

Ooh, what’s this, another type of job? This time I’m using an IJob to avoid holding up the main thread waiting for the dependencies of this system. (We can actually do the same thing in CameraControllerSystem. But next time.)

It works exactly as you’d hope: the camera moves with the doll. Currently the doll doesn’t turn at all, and we have no indication of when we can or can’t thrust, but still, this is a milestone. Here’s a video:

Although this is still rudimentary, I think I can already see some potential issues with the gameplay. The Newtonian impulse model means that, when attempting to reverse direction, you stop and end up moving in some unexpected tangential direction, and must thrust again to start moving in the direction you looked. This is counterintuitive.

One solution might be to quickly cancel the player’s current velocity before applying the impulse. Another might be to introduce friction, so that the player moves quickly during the thrust and then slows down. We’ll think about this more soon.
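To put some numbers on this, here is a small standalone sketch that compares the current impulse model with the two ideas above. It uses System.Numerics rather than Unity’s maths library so it can run on its own. The function names, the impulse size and the drag coefficient are all made up for illustration; none of this is the game’s actual code.

```csharp
using System;
using System.Numerics;

static class ThrustModels
{
    // Current behaviour: a pure Newtonian impulse. The new velocity is the
    // old velocity plus a kick along the aim direction.
    public static Vector3 AddImpulse(Vector3 velocity, Vector3 aim, float impulse)
        => velocity + Vector3.Normalize(aim) * impulse;

    // Idea 1: throw away the old velocity, then apply the kick. The velocity
    // parameter is deliberately ignored; it is kept so the signatures match.
    public static Vector3 CancelThenImpulse(Vector3 velocity, Vector3 aim, float impulse)
        => Vector3.Normalize(aim) * impulse;

    // Idea 2: friction, modelled here as exponential drag, so whatever
    // velocity the doll has decays over time between thrusts.
    public static Vector3 ApplyDrag(Vector3 velocity, float drag, float deltaTime)
        => velocity * MathF.Exp(-drag * deltaTime);

    public static void Main()
    {
        var velocity = new Vector3(10f, 0f, 0f); // flying along +X
        var aim = new Vector3(-4f, 3f, 0f);      // aiming mostly backwards, a bit up

        // Approximately (2, 6, 0): still drifting forwards, and mostly upwards.
        Console.WriteLine(AddImpulse(velocity, aim, 10f));

        // Approximately (-8, 6, 0): exactly along the aim direction.
        Console.WriteLine(CancelThenImpulse(velocity, aim, 10f));

        // After half a second with drag = 2, the speed has fallen to 10 * e^-1.
        Console.WriteLine(ApplyDrag(velocity, 2f, 0.5f));
    }
}
```

With these numbers the plain impulse leaves the doll moving at roughly (2, 6, 0) even though the player aimed backwards, which is the tangential drift described above. Cancelling first gives a velocity along the aim direction, while drag alone only helps if enough time has passed since the last thrust.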

For now… if you’ve read to the end of this marathon devlog, thanks for following along! We should be able to really steam ahead now. So, in the immortal words of Ange Ushiromiya… <See you next time.>
