Hello again, welcome back to the devlog. OK, let’s go.

FINAL ROUND vs generics

Back in devlog 4, we fought a battle to implement a generic IJobChunk. However, this ended up pretty horrible to actually use. For every version of the job we need to register a ComponentTypeHandle, generate an EntityQuery, and create an instance of the job with the appropriate parameters. That’s a lot of boilerplate. Ideally we could shove it all into a generic function, but we can’t do that because SystemAPI doesn’t support generics.

There must surely be a better way.

Ways that are not better

Currently the method I’m using to handle temporary states is to apply tag components on the player and have systems that depend on those tag components for various interactions. Tag components are appropriate for DOTS, but is it necessary to store the lifetime on the specific component? Could we instead have a component that represents a lifetime with a reference to the component that is to be removed? Would that even perhaps be more cache performant somehow?

The only problem with the naive version of this idea is that entities can only have one of a given component type. So if we had a component Status we could only have one status, which is no good. Making it a generic component would defeat the purpose of having a single component type to iterate over.

One solution might be to create a DynamicBuffer storing a list of lifetime components. This is essentially an array. It has a little bit of a performance cost compared to normal components but it might be worth considering if the rest of the idea looks good.

Basically the idea would be, in pseudocode…

for status in (statuses : DynamicBuffer<Status>)

if (Time - StartTime > Duration)

ecb.RemoveComponent<status.ComponentToRemove>

ecb.AddComponent<status.ComponentToAdd>

The more difficult issue is the idea of storing a specific component type. Is that even possible in Bursted code? My money is on probably not. But let’s try it…

using Unity.Entities;

using System;

partial struct Status : IComponentData

{

float StartTime;

float Duration;

Type ComponentToRemove;

}

Predictably,

ArgumentException: Status contains a field of System.Type, which is neither primitive nor blittable.

OK, here’s another idea: we can’t store references to components but we can store references to entities. What if instead of a component, we represent a status effect by its own entity? This might sound outlandish, but an entity is just an arbitrary association of some data. Making statuses essentially be their own archetype might have some advantages for iteration as well.

The problem with this idea is that it makes it more indirect to actually use those tag components. For example, our ThrustSystem looks for entities which have both a Thrust and a Velocity component. However, if the Thrust is on another entity entirely, this doesn’t work at all. Thrust could store a reference to the entity, but that’s a random access situation, which is precisely what ECS is supposed to avoid, and thus sounds like a code smell.

So having considered these blind alleys, what we have is probably the best option.

Maybe there’s a way around the generic SystemAPI restriction though? SystemAPI is after all just codegen; all we have to do is unwrap the SystemAPI calls and do it ourselves.

Here’s the function we wrote that schedules a generic job…

[BurstCompile]

void scheduleJob<T>(ref SystemState state)

where T: unmanaged, IComponentData, IStatus

{

ComponentTypeHandle<T> statusHandle =

SystemAPI

.GetComponentTypeHandle<T>(false);

EntityTypeHandle entityHandle =

SystemAPI

.GetEntityTypeHandle();

float deltaTime =

SystemAPI

.Time

.DeltaTime;

EntityQuery statusQuery =

SystemAPI

.QueryBuilder()

.WithAll<T>()

.Build();

var ecbSystem =

SystemAPI

.GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>();

var job = new StatusCountdownJob<T>

{ DeltaTime = deltaTime

, ECB =

ecbSystem

.CreateCommandBuffer(state.WorldUnmanaged)

.AsParallelWriter()

, StatusHandle = statusHandle

, EntityHandle = entityHandle

};

state.Dependency =

job

.ScheduleParallel

( statusQuery

, state.Dependency

);

}

There are two generic calls to SystemAPI, which generate a ComponentTypeHandle<T> and an EntityQuery. The generated code will create these in OnCreate and then Update the ComponentTypeHandle before it is used. Here for example is what the generated code for StatusTickSystem’s OnCreate function looks like…

Unity.Entities.EntityQuery __query_1988671708_0;

Unity.Entities.EntityQuery __query_1988671708_1;

Unity.Entities.EntityQuery __query_1988671708_2;

Unity.Entities.EntityTypeHandle __Unity_Entities_Entity_TypeHandle;

Unity.Entities.ComponentTypeHandle<Thrust> __Thrust_RW_ComponentTypeHandle;

Unity.Entities.ComponentTypeHandle<ThrustCooldown> __ThrustCooldown_RW_ComponentTypeHandle;

public void OnCreateForCompiler(ref SystemState state)

{

var entityQueryBuilder = new Unity.Entities.EntityQueryBuilder(Unity.Collections.Allocator.Temp);

__query_1988671708_0 = entityQueryBuilder.WithAll<Thrust>().Build(ref state);

entityQueryBuilder.Reset();

__query_1988671708_1 = entityQueryBuilder.WithAll<ThrustCooldown>().Build(ref state);

entityQueryBuilder.Reset();

__query_1988671708_2 = state.GetEntityQuery(new Unity.Entities.EntityQueryDesc{All = new Unity.Entities.ComponentType[]{Unity.Entities.ComponentType.ReadOnly<Unity.Entities.EndSimulationEntityCommandBufferSystem.Singleton>()}, Any = new Unity.Entities.ComponentType[]{}, None = new Unity.Entities.ComponentType[]{}, Options = Unity.Entities.EntityQueryOptions.Default | Unity.Entities.EntityQueryOptions.IncludeSystems});

entityQueryBuilder.Dispose();

__Unity_Entities_Entity_TypeHandle = state.GetEntityTypeHandle();

__Thrust_RW_ComponentTypeHandle = state.GetComponentTypeHandle<Thrust>(false);

__ThrustCooldown_RW_ComponentTypeHandle = state.GetComponentTypeHandle<ThrustCooldown>(false);

}

So, what is this EntityQueryBuilder? Let’s take a look at it. The documentation is here. It’s essentially the same as the SystemAPI version, but the generated code reuses the same query builder multiple times, calling Reset() in between.

So we need to store these two objects somehow. We can create a struct for that! Let’s call it StatusJobFields or something.

struct StatusJobFields<T>

where T: unmanaged, IComponentData, IStatus

{

// Fields are public so the functions in the system can set and read them.

public ComponentTypeHandle<T> Handle;

public EntityQuery Query;

}

Now a generic function to create these.

[BurstCompile]

StatusJobFields<T> getStatusFields<T>(ref SystemState state, EntityQueryBuilder queryBuilder)

where T: unmanaged, IComponentData, IStatus

{

EntityQuery query =

queryBuilder

.WithAll<T>()

.Build(ref state);

queryBuilder.Reset();

return new StatusJobFields<T>

{ Handle = state.GetComponentTypeHandle<T>(false)

, Query = query

};

}

[BurstCompile]

void scheduleStatusJob<T>(ref SystemState state, StatusJobFields<T> fields)

where T: unmanaged, IComponentData, IStatus

{

fields.Handle.Update(ref state);

EntityTypeHandle entityHandle =

SystemAPI

.GetEntityTypeHandle();

float deltaTime =

SystemAPI

.Time

.DeltaTime;

var ecbSystem =

SystemAPI

.GetSingleton<EndSimulationEntityCommandBufferSystem.Singleton>();

var job = new StatusCountdownJob<T>

{ DeltaTime = deltaTime

, ECB =

ecbSystem

.CreateCommandBuffer(state.WorldUnmanaged)

.AsParallelWriter()

, StatusHandle = fields.Handle

, EntityHandle = entityHandle

};

state.Dependency =

job

.ScheduleParallel

( fields.Query

, state.Dependency

);

}

This compiles! Hooray!

Now we can drastically simplify the earlier part of the system.

using Unity.Entities;

using Unity.Burst;

using Unity.Jobs;

using Unity.Collections;

[BurstCompile]

[UpdateInGroup(typeof(LevelSystemGroup))]

partial struct StatusTickSystem : ISystem

{

StatusJobFields<Thrust> thrustFields;

StatusJobFields<ThrustCooldown> thrustCooldownFields;

[BurstCompile]

public void OnCreate(ref SystemState state)

{

var queryBuilder =

new EntityQueryBuilder(Allocator.Temp);

thrustFields =

getStatusFields<Thrust>(ref state, queryBuilder);

thrustCooldownFields =

getStatusFields<ThrustCooldown>(ref state, queryBuilder);

}

[BurstCompile]

public void OnDestroy(ref SystemState state)

{

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

scheduleStatusJob<Thrust>(ref state, thrustFields);

scheduleStatusJob<ThrustCooldown>(ref state, thrustCooldownFields);

}

// functions discussed above

}

Much, much, much nicer. It’s a bit of a pain to have to create the fields in OnCreate, and it would be nice to be able to have my own codegen, but until we get generics in SystemAPI, this will do well enough.

When we run this, we encounter a problem…

InvalidOperationException: System.InvalidOperationException: Reflection data was not set up by an Initialize() call. For generic job types, please include [assembly: RegisterGenericJobType(typeof(MyJob<MyJobSpecialization>))] in your source file.

I forgot about this, but Burst needs to be told explicitly if you’re going to be using generic jobs under certain circumstances. Frustratingly in the time since I updated this devlog, Unity released a new version of the Entities package, and they seem to have removed the documentation for Entities 1.0-pre.15, and the documentation for Entities 1.0-pre.44 does not have documentation for generic jobs anymore. Thanks a bunch, Unity! Luckily the documentation still exists for an older version.

The problem is that we are creating jobs in a method that isn’t part of the ISystem interface, but Burst only knows to compile concrete specialisations if they’re used in OnCreate, OnUpdate etc. The documentation says we should be able to get around this by returning the job from the function called in OnUpdate. Theoretically we don’t actually have to do anything with it. So just change the return type of scheduleStatusJob to StatusCountdownJob<T> and put return job at the end, and Unity will know it has to compile these jobs. However, I tried this and it didn’t work.

Which means yet more boilerplate. We have to put

[assembly: RegisterGenericJobType(typeof(StatusCountdownJob<Thrust>))]

[assembly: RegisterGenericJobType(typeof(StatusCountdownJob<ThrustCooldown>))]

at the beginning of the file right after the using declarations, and the same for any further jobs we might want to add.

Not counting down

There is another potential improvement. Currently I am subtracting the delta-time from the remaining life of a status on every frame and writing it back to that status. It’s better to access things read-only, and we’ve just seen that repeated floating point operations can compound into big problems. It’s not likely to be a serious problem, but I can still change IStatus to store a creation time rather than a time remaining, and subtract that from the current time to get the time remaining. Same number of subtractions, less writing to memory.

Premature optimisation? Yeah, definitely. But it might help mitigate job dependency problems in the future if the status jobs are not fighting to write to the same component as other jobs.

The main impact of this is that I have to pass the Time.ElapsedTime in a couple of places like ThrustStartSystem, and I have to replace all instances where I’m using the elapsed time with double precision floats. I don’t think this will have a big cost.
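To make the change concrete, here is a minimal sketch in plain C# (no Unity types) of a status that stores its creation time rather than a countdown. ExampleStatus and StatusMath are made-up names for illustration, and the IStatus shown here is only a stand-in for the project's interface; the real components would also implement IComponentData.

```csharp
using System;

// Stand-in for the project's IStatus interface (an assumption for this sketch).
public interface IStatus
{
    double TimeCreated { get; set; }
}

// Illustrative status; a real one would also implement IComponentData.
public struct ExampleStatus : IStatus
{
    public double TimeCreated { get; set; }
}

public static class StatusMath
{
    // Time left on the status, derived from data that is only read, never written back.
    public static double TimeRemaining<T>(T status, double now, double duration)
        where T : struct, IStatus
    {
        return duration - (now - status.TimeCreated);
    }

    // True once more than `duration` seconds have passed since creation.
    public static bool HasExpired<T>(T status, double now, double duration)
        where T : struct, IStatus
    {
        return now - status.TimeCreated > duration;
    }
}
```

The status is written once, when it is created, and every later check only reads it alongside the current elapsed time.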

Chaining states together

Why go to all this effort to fiddle with the status countdowns? Well, I want to be able to chain states together. When the player clicks to initiate a thrust, I want to drastically increase drag, rotate the doll to the right direction, and then I want to put the damping back down and initiate the acceleration.

In order to do this, I need code to handle going from one state to another after a specified time. We can adapt the StatusCountdownJob for this purpose, by adding an extra step where you add a second component type.

That will work great if the other component is a tag component, but what if it’s got some data associated with it? We could create another interface that is something like ‘chainjob’, which has a field for another component inside it, and essentially nest components, but this could get messy if we’ve got a long chain.

So let’s just have it be ‘tag components only’. Instead of implementing IStatus on Thrust we can apply it to something like ThrustWindup, then ThrustActive, then maybe if we have need for it ThrustAfter.

Since the actual Thrust component isn’t going to be what controls whether thrust is applied, we don’t actually have to add or remove it. We can just permanently have it on the doll, and update its value as necessary. We can use its information in all stages of the thrust calculation.

There is one other complication. The durations of the various components will need to be known by the system that schedules each job to transition between stages in the chain. But perhaps we could see a simplification here. There is no need to store the duration on every single instance of a status component. Instead this should be known to the system, and passed to the job.

using Unity.Assertions;

using Unity.Entities;

using Unity.Burst;

using Unity.Burst.Intrinsics;

using Unity.Collections;

[BurstCompile]

public partial struct StatusChainJob<T, U> : IJobChunk

where T : unmanaged, IComponentData, IStatus

where U : unmanaged, IComponentData, IStatus

{

public double Time;

public double Duration;

public EntityCommandBuffer.ParallelWriter ECB;

[ReadOnly] public ComponentTypeHandle<T> StatusHandle;

public EntityTypeHandle EntityHandle;

public void Execute

( in ArchetypeChunk chunk

, int unfilteredChunkIndex

, bool useEnabledMask

, in v128 chunkEnabledMask

)

{

//not usable with enableable components

Assert.IsFalse(useEnabledMask);

NativeArray<T> statuses =

chunk

.GetNativeArray<T>

( ref StatusHandle

);

NativeArray<Entity> entities =

chunk

.GetNativeArray

( EntityHandle

);

U nextComponent = new U();

nextComponent.TimeCreated = Time;

for (int i = 0; i < chunk.Count; ++i)

{

T status = statuses[i];

double timeElapsed = Time - status.TimeCreated;

if (timeElapsed > Duration) {

ECB.RemoveComponent<T>

( unfilteredChunkIndex

, entities[i]

);

ECB.AddComponent<U>

( unfilteredChunkIndex

, entities[i]

, nextComponent

);

}

}

}

}

StatusTickSystem, which I’m renaming StatusTransitionSystem, can read the durations of the various types of status from a configuration struct. We can use Level for this. I had a small issue where trying to access Level with GetSingleton was running before the Level was actually baked, but that was solved by implementing ISystemStartStop and moving the initialisation functions into OnStartRunning.

partial struct StatusTransitionSystem : ISystem, ISystemStartStop

{

StatusJobFields<Thrust> thrustFields;

StatusJobFields<ThrustCooldown> thrustCooldownFields;

[BurstCompile]

public void OnStartRunning(ref SystemState state)

{

var queryBuilder = new EntityQueryBuilder(Allocator.Temp);

Level levelSettings = SystemAPI.GetSingleton<Level>();

thrustFields = getStatusFields<Thrust>(ref state, queryBuilder, levelSettings.ThrustDuration);

thrustCooldownFields = getStatusFields<ThrustCooldown>(ref state, queryBuilder, levelSettings.ThrustCooldown);

}

// other stuff

}

At this point I think we’re finally ready to actually create the logic I want. I am coming to think generics were a huge mistake and I should have just accepted some repeated code.

Sphere interpolation

OK, so, let’s first handle the ‘before thrust’ code. We’re going to be deleting the rotation spring and creating a new means for rotation.

The slerp function takes a starting rotation and a final orientation. The start rotation is the current orientation of the doll when the command to accelerate is received. The target rotation is the rotation of the camera at this time. We store both on the ThrustWindup component in the ThrustStartSystem.

Here’s a super basic implementation of the rotation logic:

partial struct ThrustAlignmentJob : IJobEntity

{

public double Time;

public float Duration;

void Execute(in Thrust thrust, in ThrustWindup windup, ref Rotation rotation)

{

float amount =

math

.smoothstep

( 0f

, 1f

, (float)(Time - windup.TimeCreated) / Duration

);

rotation.Value =

math

.slerp

( windup.InitialRotation

, windup.TargetRotation

, amount

);

}

}

The Duration is read out from the Level singleton.

We also rewrite the StatusTransitionSystem:

StatusJobFields<ThrustWindup> thrustWindupFields;

StatusJobFields<ThrustActive> thrustActiveFields;

StatusJobFields<ThrustCooldown> thrustCooldownFields;

[BurstCompile]

public void OnStartRunning(ref SystemState state)

{

var queryBuilder = new EntityQueryBuilder(Allocator.Temp);

Level levelSettings = SystemAPI.GetSingleton<Level>();

thrustWindupFields = getStatusFields<ThrustWindup>(ref state, queryBuilder, levelSettings.ThrustWindup);

thrustActiveFields = getStatusFields<ThrustActive>(ref state, queryBuilder, levelSettings.ThrustDuration);

thrustCooldownFields = getStatusFields<ThrustCooldown>(ref state, queryBuilder, levelSettings.ThrustCooldown);

}

[BurstCompile]

public void OnUpdate(ref SystemState state)

{

scheduleChainJob<ThrustWindup, ThrustActive>(ref state, thrustWindupFields);

scheduleEndJob<ThrustActive>(ref state, thrustActiveFields);

scheduleEndJob<ThrustCooldown>(ref state, thrustCooldownFields);

}

and apply [WithAll(typeof(ThrustActive))] to ThrustAccelerationSystem.

However, even with smoothstep, this feels a little mechanical. A little anticipation might help. Let’s create a cubic Bézier curve. That must be supported in the Mathematics library, right…..?

lol nope we’re going to have to write it ourselves.

The Bézier rabbit hole

We’ll basically copy the idea of the CSS Cubic Bézier. That is defined in parametric form by

\[\mathbf{B}(t)=(1-t)^3\mathbf{P}_0+3(1-t)^2t\mathbf{P}_1+3(1-t)t^2\mathbf{P}_2+t^3\mathbf{P}_3\]

In the case of an easing function, \(\mathbf{P}_0\) is \((0,0)\) and \(\mathbf{P}_3\) is \((1,1)\). However, we don’t want an \((x,y)\) point as a function of \(t\), but \(y\) as a function of \(x\)! Unfortunately a quick search wasn’t able to find an explicit formula for this quantity. Perhaps we can derive it ourselves? We have…

\[\begin{align*} B_x(t)&=3(1-t)^2t P_{1x} + 3(1-t)t^2 P_{2x} + t^3 \\ B_y(t)&=3(1-t)^2t P_{1y} + 3(1-t)t^2 P_{2y} + t^3 \end{align*}\]

We need to get \(t\) as a function of \(B_x\) and the \(P_{ix}\), and then substitute it into \(B_y\). First of all we should expand all the terms to get a plain cubic:

\[(1-3P_{2x}+3P_{1x})t^3+3(P_{2x}-2P_{1x})t^2 + 3P_{1x}t-B_x=0\]

Solving this cubic is a bit fiddly. Let’s write it in a cleaner form:

\[\begin{align*} 0&=at^3+bt^2+ct+d\\ a &= 1-3P_{2x}+3P_{1x}\\ b &= 3(P_{2x} - 2P_{1x})\\ c &= 3P_{1x}\\ d &= -B_x \end{align*}\]

My initial approach was to convert to the depressed cubic and apply Cardano’s formula. Unfortunately, we can’t assume this cubic has only one root. In general it might have one or three real roots, and we’re only interested in the root between 0 and 1. Once I realised this, I considered some other, more painful methods. Here’s that whole sordid blind alley:

The quest to solve a cubic quickly

First we need to convert it to a depressed cubic, with the change of variable \(u=t+\frac{b}{3a}\). With this change of variable, we get

\[\begin{align*} 0&=u^3+pu+q\\ p &= \frac{3ac-b^2}{3a^2}\\ q &= \frac{2b^3 - 9abc + 27a^2d}{27a^3} \end{align*}\]

Now the solutions of the cubic can be found. In this case we expect there to be just one real root, which lets us use Cardano’s formula. This requires that

\[\Delta = \frac{q^2}{4}+\frac{p^3}{27}>0\]

and in that case the real root is

\[u_0=\sqrt[3]{-\frac{q}{2}+\sqrt{\Delta}}+\sqrt[3]{-\frac{q}{2}-\sqrt{\Delta}}\]

so the solution to the original cubic is

\[t_0=u_0-\frac{b}{3a}\]

In C#, you’re not allowed non-member functions. So I can’t just write this as a function in some namespace, I have to attach it to something. In this case I guess I will just define a static class called Util and put it in a namespace. Here we go then:

using Unity.Mathematics;

using Unity.Assertions;

namespace ThrustDoll

{

public static class Util

{

public static float CubicBezierEase(float p1x, float p1y, float p2x, float p2y, float x)

{

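// Coefficients of the cubic a*t^3 + b*t^2 + c*t + d = 0, with d = -x.

float a = 1 - 3 * p2x + 3 * p1x;

float b = 3 * (p2x - 2 * p1x);

float c = 3 * p1x;

float d = -x;

// Depressed cubic u^3 + p*u + q = 0; this divides by a, so a must be non-zero.

float p = (3*a*c - b*b) / (3*a*a);

float q = (2*b*b*b - 9*a*b*c + 27*a*a*d) / (27*a*a*a);

float delta = q*q/4 + p*p*p/27;

// Cardano's formula only covers the case of a single real root.

Assert.IsTrue(delta > 0);

float r0 = -q/2 + math.sqrt(delta);

float r1 = -q/2 - math.sqrt(delta);

// Real cube roots: math.pow gives NaN for a negative base, and 1/3 would be integer division.

float t = math.sign(r0) * math.pow(math.abs(r0), 1f/3f) + math.sign(r1) * math.pow(math.abs(r1), 1f/3f) - b/(3*a);

// Evaluate the y polynomial with the y control values.

return (1 - 3*p2y + 3*p1y)*t*t*t + 3*(p2y - 2*p1y)*t*t + 3*p1y*t;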

}

}

}

This (assuming no mistakes) computes Cardano’s formula for the real root as discussed above. There is an assertion to guard against the case where we’d have to do complex number calculations.

Unfortunately, this failed miserably. Investigation in Desmos suggested the baseline assumption, that this is an equation with one real root, is invalid. We could try using the general cubic formula, but this requires complex numbers; or the formula with trigonometric functions, but we’ve made things very complicated for what’s supposed to be a trivial operation.
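For reference, the trigonometric form mentioned above is the standard one for the case \(\Delta<0\) (which forces \(p<0\)), where the depressed cubic has three real roots:

\[u_k = 2\sqrt{-\frac{p}{3}}\,\cos\left(\frac{1}{3}\arccos\left(\frac{3q}{2p}\sqrt{-\frac{3}{p}}\right)-\frac{2\pi k}{3}\right),\qquad k=0,1,2\]

Each root maps back via \(t_k=u_k-\frac{b}{3a}\), and for an easing curve we would keep whichever \(t_k\) lies in \([0,1]\).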

The approach used by the GNU Scientific Library is to find the eigenvalues of the companion matrix. Unfortunately we don’t have a convenient way to compute eigenvalues within Unity. That said, if we can find a simple expression for the solution of our particular cubic by this method, we could hardcode it in. But… this sounds hilariously complicated if you’re not a computer, so let’s not do that.

Although I don’t presently have a Mathematica license, we may be able to get an answer out of Wolfram Alpha. I tried this and the results were, uh, horrible. Otherwise, we can find an approximate solution with Newton-Raphson iteration, which is the approach taken here in a JavaScript implementation of Bézier easing.
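For comparison, a Newton-Raphson version of the general easing function might look roughly like this in plain C#. This is a sketch under stated assumptions, not the linked JavaScript implementation: the BezierEasing class, the iteration count and the tolerances are all made up for illustration, and it assumes p1x and p2x lie in [0, 1] so that \(B_x(t)\) is monotonic.

```csharp
using System;

public static class BezierEasing
{
    // Evaluates a*t^3 + b*t^2 + c*t for control values p1, p2 (end values 0 and 1).
    static float Component(float p1, float p2, float t)
    {
        float a = 1f - 3f * p2 + 3f * p1;
        float b = 3f * p2 - 6f * p1;
        float c = 3f * p1;
        return ((a * t + b) * t + c) * t;
    }

    // Derivative of Component with respect to t.
    static float Derivative(float p1, float p2, float t)
    {
        float a = 1f - 3f * p2 + 3f * p1;
        float b = 3f * p2 - 6f * p1;
        float c = 3f * p1;
        return (3f * a * t + 2f * b) * t + c;
    }

    // Returns y for a given x by solving B_x(t) = x numerically, then evaluating B_y(t).
    public static float Ease(float p1x, float p1y, float p2x, float p2y, float x)
    {
        x = Math.Min(Math.Max(x, 0f), 1f);
        float t = x; // initial guess
        for (int i = 0; i < 8; ++i)
        {
            float error = Component(p1x, p2x, t) - x;
            if (Math.Abs(error) < 1e-6f) break;
            float slope = Derivative(p1x, p2x, t);
            // Give up on a flat spot; a fuller implementation would fall back to bisection.
            if (Math.Abs(slope) < 1e-6f) break;
            t = Math.Min(Math.Max(t - error / slope, 0f), 1f);
        }
        return Component(p1y, p2y, t);
    }
}
```

A call such as BezierEasing.Ease(0.25f, 0.1f, 0.25f, 1f, x) would correspond to the control points of the CSS ease curve.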

…so yeah, I’m overcomplicating this! Seriously overcomplicating this!

We don’t necessarily have to compute the fully general case of a cubic Bézier for the effect I want. We could take the case where

\[\begin{align*} P_{1x}&=\frac{1}{3}\\ P_{2x}&=\frac{2}{3} \end{align*}\]

which causes the x component of the Bézier to reduce to \(B_x(t)=t\). Then we can evaluate the \(y\) component very easily. In testing, the resulting easing function is decent: not quite as freely defined as a true Bézier but close enough for our purpose.
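To check the claim that the x component collapses, substitute these values into the coefficients \(a\), \(b\), \(c\) of the cubic in \(t\) defined earlier:

\[\begin{align*} a &= 1-3\cdot\tfrac{2}{3}+3\cdot\tfrac{1}{3}=0\\ b &= 3\left(\tfrac{2}{3}-2\cdot\tfrac{1}{3}\right)=0\\ c &= 3\cdot\tfrac{1}{3}=1 \end{align*}\]

so \(B_x(t)=t\), and with \(x=t\) the easing curve is simply

\[y(x)=(1-3P_{2y}+3P_{1y})x^3+3(P_{2y}-2P_{1y})x^2+3P_{1y}x\]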

Here’s the revised computation:

namespace ThrustDoll

{

public static class Util

{

public static float BezierComponent(float p1y, float p2y, float t)

{

float a = 1 - 3 * p2y + 3 * p1y;

float b = 3 * p2y - 6 * p1y;

float c = 3 * p1y;

return a*t*t*t + b*t*t + c*t;

}

}

}

This can be used for both cubic easing in this specific limited case, and also generally for calculating parametric Bézier curves. And it’s dead simple, with barely any more calculations than smoothstep.
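As a quick illustration of the easing use, here is a plain C# sketch where a negative first control value makes the curve dip below zero before heading towards 1, which is the anticipation effect. The EasingExample class and the control values (-0.5 and 1) are arbitrary choices for this example, not values from the game.

```csharp
using System;

public static class EasingExample
{
    // Same polynomial as Util.BezierComponent, repeated so the sketch stands alone.
    public static float BezierComponent(float p1y, float p2y, float t)
    {
        float a = 1 - 3 * p2y + 3 * p1y;
        float b = 3 * p2y - 6 * p1y;
        float c = 3 * p1y;
        return a*t*t*t + b*t*t + c*t;
    }

    // Easing with anticipation: starts at 0, goes slightly negative, ends at 1.
    // The control values are arbitrary examples.
    public static float AnticipateEase(float x)
    {
        return BezierComponent(-0.5f, 1f, x);
    }
}
```

In a job like ThrustAlignmentJob, the result could replace the smoothstep value passed to slerp, bearing in mind that an interpolation amount below zero extrapolates backwards past the initial rotation.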

But having implemented this… it doesn’t feel very good! The anticipation happens too fast to really make sense, and if I make the windup too long, it’s going to feel highly unresponsive. It also feels very mechanical, perhaps even more so than smoothstep did.

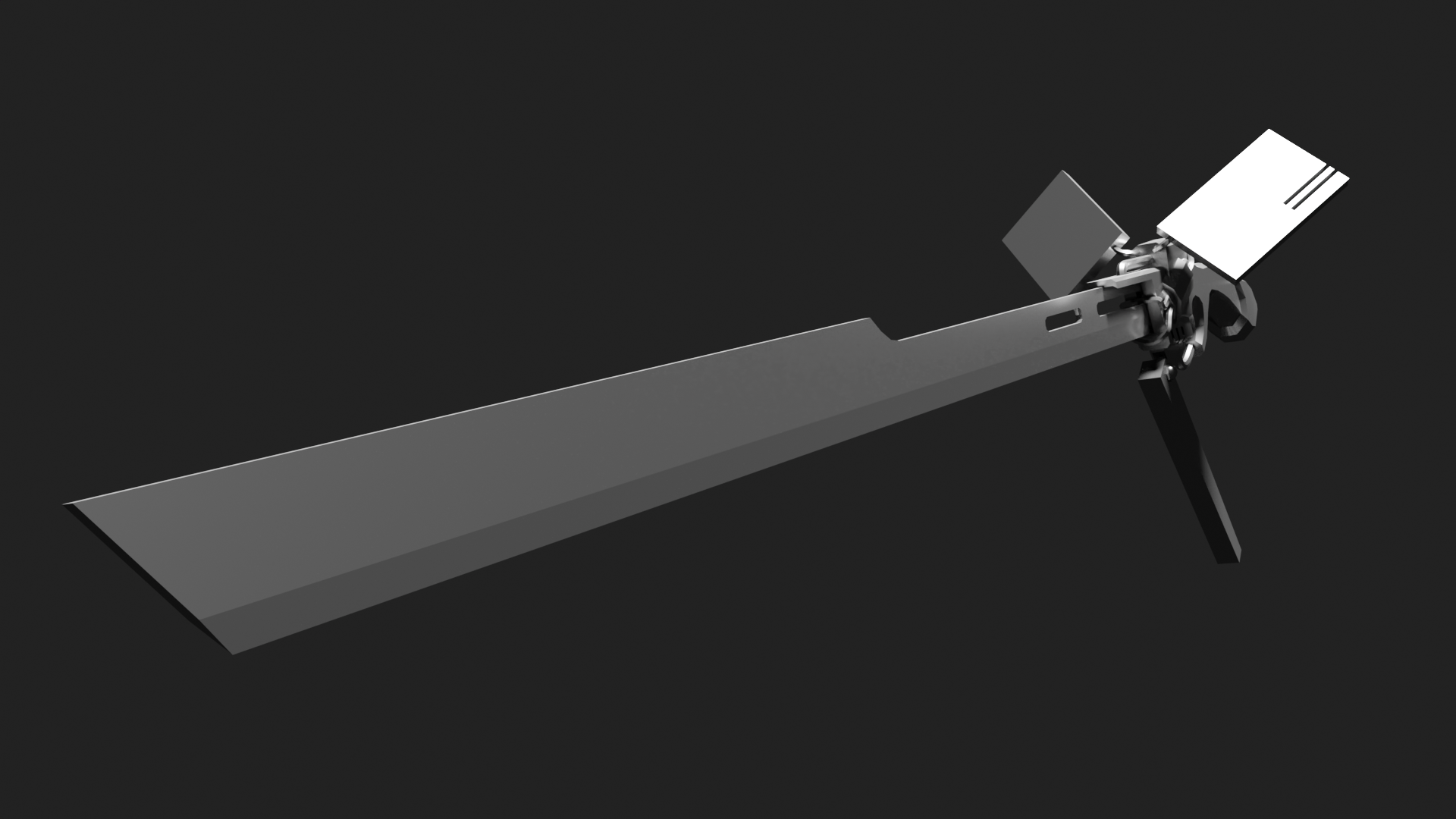

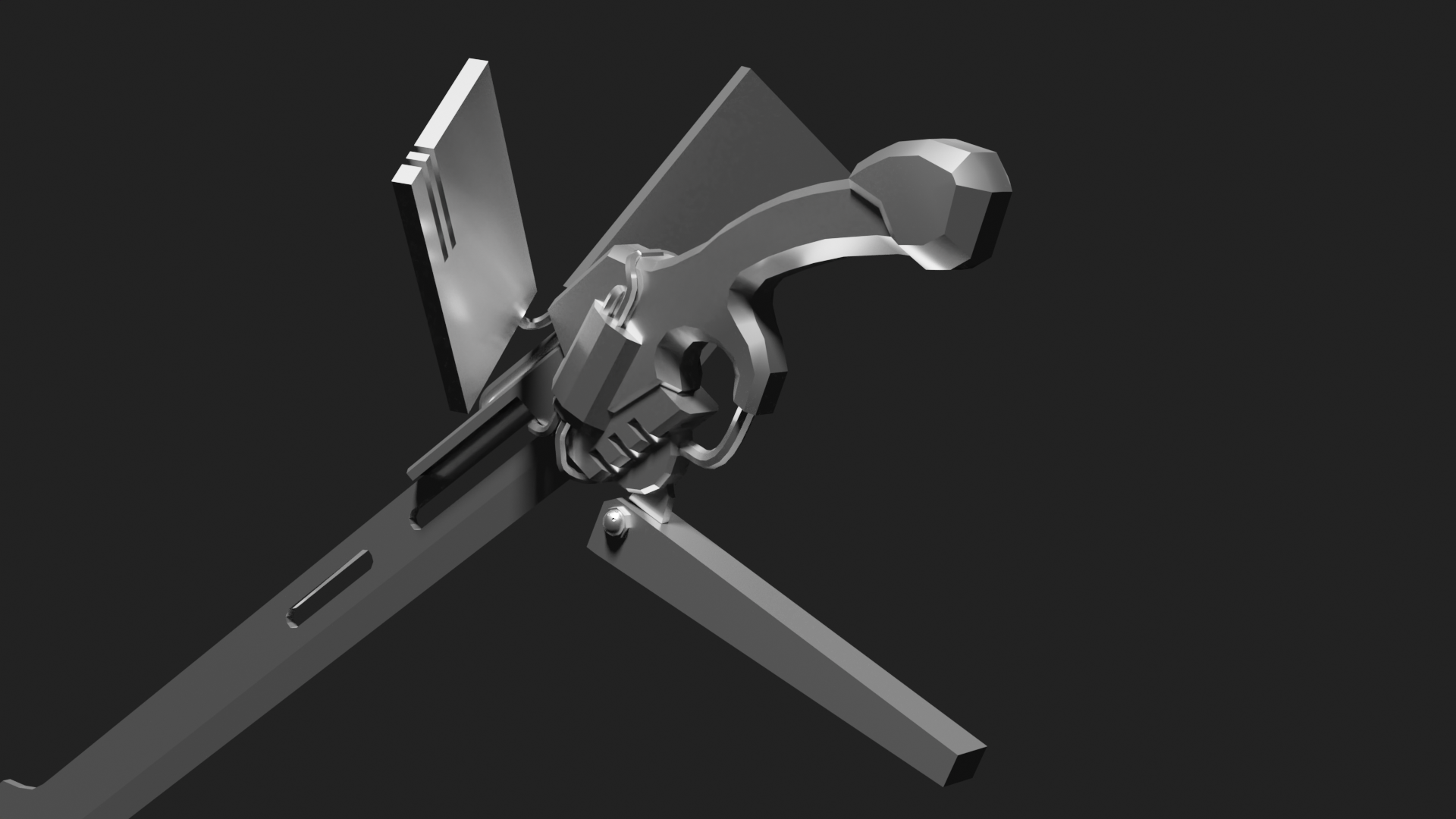

Making a sword

At the end of February I got quite ill and I wasn’t able to do much work on the game. Instead, I put some time into modelling. The major new addition is this sword…

The design of the sword is inspired primarily by the Type-4O Sword in NieR Automata and various designs from the manga Blame by Tsutomu Nihei. The latter inspired the blocky cuboid components. Other influences are gunblades from the Final Fantasy franchise.

Like the doll, the main technique used was straight-up polymodelling, but I also used Bézier curves for the various wires and tubes. The Auto Smooth setting is used to create a mix of hard and soft edges.

A proper animation and movement system

We can only get so far without actually doing some skeletal animation.

Here’s an idea for a better motion.

- if the motion involves only a small change of angle, i.e. within a narrow cone of say 30-45 degrees (to be fine tuned), we just smoothstep the rotation. The legs can twist a little to give feedback. This will happen fast.

- if the motion involves a larger change of angle, the doll will flare out, then turn into the desired direction. In this case, we increase the damping during the windup step. This will take longer.

- if the turn is primarily to the left or right, she will twist her torso and hold this pose until she’s aligned

- if the turn is primarily upwards, she will arch her back upwards.

- if it’s primarily downwards, she will do a somersault.

- after turning, she should bunch up her legs before thrusting as an anticipation pose.
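The decision in the list above could be sketched as a small classifier. This is only an illustration in plain C# using System.Numerics rather than Unity.Mathematics; TurnKind, TurnClassifier and the 40 degree threshold are all hypothetical, and the real thresholds would need tuning as noted.

```csharp
using System;
using System.Numerics;

public enum TurnKind { Small, TwistSideways, ArchUp, Somersault }

public static class TurnClassifier
{
    // Hypothetical threshold; the plan suggests somewhere in the 30-45 degree range.
    public const float SmallTurnDegrees = 40f;

    // forward and up are the doll's current axes; target is the desired facing direction.
    public static TurnKind Classify(Vector3 forward, Vector3 up, Vector3 target)
    {
        forward = Vector3.Normalize(forward);
        up = Vector3.Normalize(up);
        target = Vector3.Normalize(target);

        // Angle between the current and desired facing directions.
        float cosAngle = Math.Min(Math.Max(Vector3.Dot(forward, target), -1f), 1f);
        float angleDegrees = MathF.Acos(cosAngle) * 180f / MathF.PI;
        if (angleDegrees <= SmallTurnDegrees) return TurnKind.Small;

        // For larger turns, compare the sideways and vertical parts of the target direction.
        Vector3 right = Vector3.Cross(up, forward);
        float sideways = Vector3.Dot(target, right);
        float vertical = Vector3.Dot(target, up);

        if (Math.Abs(sideways) >= Math.Abs(vertical)) return TurnKind.TwistSideways;
        return vertical > 0f ? TurnKind.ArchUp : TurnKind.Somersault;
    }
}
```

The result would then pick which animation to blend in during the windup; the leg bunch-up before thrusting applies in every case, so it is not part of the classification.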

The motion will be a combination of Blender-authored animation and programmatic animation. This means we need to start dealing with the question of animation blending!

…or we would. I created some animations in Blender, but once I started testing them with Optimised Skeletons, I ran into some unforeseen bugs in Latios Framework.

Let’s go through it in detail.

Making some animations in Blender

So. Just about any property in Blender can be animated on the timeline. The main ones we animate are of course transform properties (position, rotation etc.), but we can equally animate rendering properties, modifier properties, basically anything with a number can have keyframes.

These keyframes are interpolated, by default using Bézier curves, but you can use a variety of easing functions. And this will update any children, skinned meshes, etc. that depend on that property. The Rigify rig uses a combination of parenting, constraints and ‘drivers’ (short Python scripts which compute the value for a property) to animate its skeleton with all its advanced features like FK/IK switching, updating the UI, etc. etc.

Obviously none of that works in Unity. Unity only takes pure FK rigs. Luckily, the Blender FBX exporter has a toggle to ‘bake animations’, and also to filter out non-deform bones, so all we need to do is make an animation in Blender and export it and we’ll have all the benefits of IK etc. in authoring. That’s great as long as we don’t need to procedurally mess with our animation, implement dynamic IK etc., but that’s a bridge for another day.

In Blender, animation data is stored on a data structure called an ‘Action’. You can edit one Action at a time for an object, and you can mark other Actions to not be deleted. All applicable Actions will be exported, and presented as animation clips in Unity. So a pretty convenient system.

To test this system, I made a couple of basic animations in Blender. We have a ‘straight flight’ animation that just loops with the feet gently moving around…

And we have a ‘rotate up small’ animation which moves in a way to suggest the doll is twisting to face slightly upwards.

Here I’ve included ‘root motion’ (changing the transform of the object as a whole) for context, but for importing into Unity I remove the root motion so it’s just a small arm/leg movement. The point is to blend it with the flight animation before and after, and thus learn about how Kinemation handles the problem of animation blending.

However…

The baking wars

I wanted to use optimised meshes, both for performance and because they provide ready support for animation blending. Dreaming also told me that in the upcoming version 0.7 of Latios, it will be most practical to implement IK on optimised skeletons. So lots of reasons to get ready to handle optimised skeletons now.

My model is a bit complex, with a variety of both skinned meshes (the doll herself, the grappling hook launcher on her wrist, the spool in her shoulder, the line running from the grappling hook to the spool, the flexible attachment between the jet engine and her back) and rigid meshes (the jet engine, the sword, the grappling hook itself, the pulley at the elbow and a new part I added over the groin). In some cases, I have parent chains of rigid components which I didn’t bother to merge for modelling convenience (e.g. when I modelled with modifiers).

When you import an armature into Unity, it creates a hierarchy of parented GameObjects which correspond to all the bones of the original skeleton, and the rigid objects are parented to this armature. When you click the toggle to generate an optimised mesh, it does some behind-the-scenes stuff to store this armature in a special data structure, and creates a completely flat hierarchy of GameObjects representing either exposed transforms or stuff that has models. Unity’s Animator component has access to this special hidden animation system.

With DOTS, you don’t get any of that, so Dreaming had to invent a way to extract this data and make his own version of the system, using ACL for compression. The actual process of baking the GameObjects into a DOTS equivalent is kind of hilariously complicated—more on that in a moment.

So, I imported my FBX model, applied the usual settings changes, and verified that all seemed to work. Then, I tried clicking the Optimise GameObjects button to see what might happen…

That’s not supposed to be there!

Further investigation revealed what was going on. The various rigid components were supposed to be baked as Exported Bones, which would track a particular bone in the optimised hierarchy. However, only whichever item was first on the list would get baked, and the bone it would track would almost always be the left hand… except in one situation where it was the right hand instead. Other objects would start off in the right place for the beginning of the animation, but they would not get animated.

Tracking down a baking bug

So what’s going on? In the course of debugging, Dreaming explained the whole system to me, and I will reproduce his explanation here since it’s fascinating to know how things work…

How Kinemation bakes optimised skeletons

Rather than play remote debugging, I’m going to explain how optimized skeleton baking works, the challenges it is dealing with, and the bugs I have had in the past with it. This bug is very similar to a bug I thought I fixed in 0.6.4 but apparently not. It will help to look at the actual Kinemation source code while reading through this explanation.

When Unity imports a hierarchy with optimizations, it takes all exported transforms and reparents them to the root animator GameObject. Internally, it has its own representation of the hierarchy, but user code cannot see it. In order for Kinemation to bake the optimized hierarchy, it has to jump through some hoops.

Inside the skeleton baker (which runs for every Animator), if Kinemation detects the hierarchy is optimized, it scans the immediate children (only the first level, no grandchildren). If the child has a SkinnedMeshRenderer or Animator, it is skipped over. Otherwise, it is added to two dynamic buffers ExportedBoneGameObjectRef and OptimizedSkeletonExportedBone. The child is also marked as TransformUsageFlags.ManualOverride. Lastly, the skeleton creates a request for something called a “shadow hierarchy” to be built.

Next, in a baking system, the shadow hierarchies are built. Multiple bakers may request the same shadow hierarchy, so the same hierarchy may be shared for the skeleton, mesh renderers, and animation clip sampling. The shadow hierarchy builder instantiates a copy of your Animator GameObject, then does some processing on it.

For an optimized hierarchy, it scans the first level children of these clones, and just like before, checks if they have SkinnedMeshRenderers or Animators of their own. In the case of a SkinnedMeshRenderer, it adds a component to that shadow child to track the skinned mesh. In the case of an Animator, it adds the shadow child to a destroy queue. The other children are assumed to be exported bones, and the builder tags them with a component that references the original authoring child the shadow child is a clone of (this cross-referencing is the reason for the destroy queue as destroying them immediately would desync the hierarchies).

Then, the builder iterates through the shadow children and queues up any of their children for destruction if they do not have a skinned mesh in their ancestry, and tags the skinned meshes it discovers. After that, the builder, now having made all the cross-references it cares about, destroys all the GameObjects in the destroy queue, reducing the hierarchy to the root, exported bones, and transforms that have skinned mesh descendants, with all exported bones being tagged with a ShadowCloneTracker, and all GameObjects that have a skinned mesh either directly or as a descendant having a ShadowCloneSkinnedMeshTracker. Lastly for this build step, the builder calls DeoptimizeTransformHierarchy on the hierarchy, which will expand out the hierarchy with all the hidden transforms.

Next, a baking system captures the binding paths for skinned meshes, as the shadow hierarchy makes this information visible. I won’t go into details, since that is mostly unrelated.

After that, another baking system goes through the shadow hierarchy and destroys all the GameObjects with the ShadowCloneSkinnedMeshTracker. What remains is the actual hierarchy the optimized skeleton will be built on top of. An optimized skeleton is generated by iterating over this remaining shadow hierarchy in breadth-first order. In the code, I perform this iteration using a Queue.

The next baking system computes the initial OptimizedBoneToRoot buffer for the skeleton. This buffer gets overwritten by animation at runtime, so you may not care about it. But the code is very straightforward. I actually have two different versions of this baking system, with one commented out. They typically generate the same result, but the one in place now is more efficient and robust.

Next, the skeleton binding paths are built. This will be important when I discuss debugging later. It is capturing the ancestry of each bone as a string ordered from bone to root, and the strings get ordered by their optimized skeleton bone index.

The next baking system, AssignExportedBoneIndicesSystem, is very important. It breadth-first iterates the shadow hierarchy for any transform with a ShadowCloneTracker. Whenever it finds one, it searches through the ExportedBoneGameObjectRef for a match, and assigns the OptimizedSkeletonExportedBone of the same index with the optimized skeleton index of the GameObject with the ShadowCloneTracker.

The next baking system builds out the parent hierarchy, computing all the parent indices. Not much to talk about here. It is pretty straightforward and outside of debugging, likely not related to the issues at hand.

The next three baking systems are related to skinned meshes and exposed skeletons, so don’t worry about those. But then we get to SkeletonClipSetSmartBlobberSystem, which is the thing that bakes animation clips by playing animations on the shadow hierarchy, copying all the bone transforms into buffers, and then sending them off to ACL to compress. After that come two systems that read the mesh and skeleton paths and pack them into blob assets. And then a third system does the same thing with the already computed parent indices.

Now we get to the crux system that is likely causing all our problems, SetupExportedBonesSystem. I hate this system. The first job, ClearJob, nulls out the BoneOwningSkeletonReference of all previously existing exported bones from incremental baking. Exported bones that have a null skeleton should have their exported bone status undone.

The next two jobs count the exported bones and resize a parallel HashSet.

Then we get to ApplySkeletonsToBonesJob. The first thing it does is check if any exported bone is referencing the root as its index in the optimized hierarchy as was computed in AssignExportedBoneIndicesSystem. In such cases, the bone likely isn’t an exported bone at all, but instead something misidentified. I give it my best shot of restoring its transform status to a normal child of the skeleton, and remove it from the exported bones list. I wrote this code while tracking down a bug that ended up being in AssignExportedBoneIndicesSystem prior to 0.6.4. Theoretically, this if block should never happen now that that other bug is fixed. I would encourage you to add a breakpoint or logging in this block.

The next block iterates through each exported bone again, and if the exported bone already has the required components, reinitializes the values for safety. Otherwise, it records a bunch of ECB commands to remove the default transforms and set the optimized transforms. Lastly, it adds each exported bone to the HashSet.

RemoveDisconnectedBonesJob looks for any entity with the exported bones from incremental baking that no longer have a skeleton reference (they never showed up in an OptimizedSkeletonExportedBone dynamic buffer). It then removes their remaining exported bone components.

Lastly, and also a really ugly part of this system, is the ReChildExportedBonesJob. You see, when you specify TransformUsageFlags.ManualOverride, Unity’s built-in baking systems unparent all the children for that entity. I run a job that checks every single entity with a parent, checks if they were reparented away from an exported bone in the hashset, and corrects their parent value. In 0.7, this won’t be necessary because exported bones won’t require any special archetype (it is a vectors vs matrices issue).

So lastly, we can get into debugging this. Aside from the breakpoint tip I mentioned earlier, your most powerful tool will be looking at the inspector for SkeletonBindingPathsBlobReference. It looks like this:

OptimizedSkeletonHierarchyBlobReference also has a custom inspector that lets you look at the parent indices. So now you can check which transforms made it into the final optimized skeleton, and what their hierarchy looks like. You can cross-reference these with the bone index of a CopyLocalToParentFromBone component, and see if the names match up. If they don’t, that’s something to investigate. And if there’s a bone in the optimized skeleton, but its exported counterpart with the same name is missing the CopyLocalToParentFromBone, then you can investigate that too. The best way to debug baking issues is to start with a closed subscene, and then trace what happens when the subscene is opened, as this does a full rebake in-process.

This is a lot of information, but hopefully provides enough insight for you and/or anyone else to track down these mysterious behaviors. It is the best I can do short of a repro project.

I have to say, every time I’ve had a problem using Latios, Dreaming has consistently been incredibly helpful and thorough, even while busily developing new features for the framework. I’m once again extremely grateful for the help, and glad I can contribute to debugging it.

So, having been given a roadmap, let’s see if we can figure out what’s happening here.

Let’s start with the suggestion to add a breakpoint or logging to the block in SetupExportedBonesSystem’s ApplySkeletonsToBonesJob that is supposed to never run. (To modify Latios code, or any other third-party package, we need to move it from the Library/PackageCache folder to the Packages folder. Then alt-tab back into Unity and wait for the progress bar to finish rebuilding everything.)

The block in question starts on line 138 of SetupExportedBonesSystem. Since I’m not currently using an IDE integrated with Unity’s debugger, I’ll just add a Debug.Log statement in this block. Sure enough, the message I wrote appears in the console! 10 times, corresponding to 11 exported transforms, minus the one that gets baked.

So we have a lead. For some reason, a lot of our exported bones have been assigned the wrong bone index, zero. Then once they get into SetupExportedBonesSystem, it thinks they’re not really exported bones and helpfully ‘fixes’ them to act as normal entities which don’t know anything about skeletons.

That means the problem probably happens somewhere earlier. Let’s next take a look at AssignExportedBoneIndicesSystem, since assigning exported bone indices is what isn’t happening…

Here’s that system since it’s pretty short:

AssignExportedBoneIndicesSystem

    using System.Collections.Generic;
    using Unity.Collections;
    using Unity.Collections.LowLevel.Unsafe;
    using Unity.Entities;
    using Unity.Entities.Exposed;

    namespace Latios.Kinemation.Authoring.Systems
    {
        [RequireMatchingQueriesForUpdate]
        [DisableAutoCreation]
        public partial class AssignExportedBoneIndicesSystem : SystemBase
        {
            Queue<UnityEngine.Transform> m_breadthQueue;

            protected override void OnUpdate()
            {
                if (m_breadthQueue == null)
                    m_breadthQueue = new Queue<UnityEngine.Transform>();

                Entities.ForEach((ref DynamicBuffer<OptimizedSkeletonExportedBone> exportedBones, in DynamicBuffer<ExportedBoneGameObjectRef> gameObjectRefs,
                                  in ShadowHierarchyReference shadowRef) =>
                {
                    m_breadthQueue.Clear();
                    m_breadthQueue.Enqueue(shadowRef.shadowHierarchyRoot.Value.transform);
                    int currentIndex = 0;

                    while (m_breadthQueue.Count > 0)
                    {
                        var bone = m_breadthQueue.Dequeue();
                        var link = bone.GetComponent<HideThis.ShadowCloneTracker>();
                        if (link != null)
                        {
                            var id = link.source.gameObject.GetInstanceID();
                            int i = 0;
                            foreach (var go in gameObjectRefs)
                            {
                                if (go.authoringGameObjectForBone.GetInstanceID() == id)
                                {
                                    exportedBones.ElementAt(i).boneIndex = currentIndex;
                                    break;
                                }
                            }
                            i++;
                        }
                        currentIndex++;

                        for (int i = 0; i < bone.childCount; i++)
                        {
                            var child = bone.GetChild(i);
                            m_breadthQueue.Enqueue(child);
                        }
                    }
                }).WithEntityQueryOptions(EntityQueryOptions.IncludeDisabledEntities | EntityQueryOptions.IncludePrefab).WithoutBurst().Run();

                m_breadthQueue.Clear();
            }
        }
    }

So this walks over the shadow bone hierarchy, and when it finds a bone which has a HideThis.ShadowCloneTracker, it takes the GameObject instance ID of the linked GameObject, and then searches the ExportedBoneGameObjectRef buffer for a matching ID. Finally, it writes the current bone index to the boneIndex field of the OptimizedSkeletonExportedBone at the same position in the exported bones dynamic buffer. Let’s put in some debug statements to see what it’s writing. I started with…

UnityEngine.Debug.LogFormat("currentIndex: {0}, gameObject: {1}", currentIndex, id);

The result is that eleven lines are written to the console with seemingly valid numbers for currentIndex (i.e. not zero). If we run down the list, they seem to match the things we were expecting to be exported.

So this system seems to be good… or is it? There’s actually one other variable here that’s relevant. In the inner foreach loop, the variable i is supposed to track the position in gameObjectRefs, so that the write lands on the element of exportedBones with the same index. However, the i++ that increments this variable is actually outside the foreach block, so i is always zero at the moment of the write. So all the writing goes onto the first bone, the other bones aren’t touched (and keep their default index of zero), and the first bone ends up with the last bone’s index.
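To make the failure mode concrete, here's a stripped-down sketch in plain C# with no Unity types. Everything in it is made up for illustration: the class and method names, the integer IDs standing in for GameObject instance IDs, and the tuple list standing in for the shadow hierarchy walk. It is not the actual patch that went into Latios, just the same mistake and one plausible way of avoiding it.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the exported bone matching logic.
// refIds      ~ instance IDs stored in the ExportedBoneGameObjectRef buffer
// tracked     ~ (instance ID, skeleton bone index) pairs found while walking the hierarchy
// return value ~ the boneIndex that ends up on each exported bone
public static class ExportedBoneIndexDemo
{
    // Same shape as the buggy loop: i is only incremented after the
    // foreach has finished, so every match writes to slot 0.
    public static int[] AssignBuggy(IReadOnlyList<int> refIds,
                                    IReadOnlyList<(int id, int boneIndex)> tracked)
    {
        var result = new int[refIds.Count];
        foreach (var (id, boneIndex) in tracked)
        {
            int i = 0;
            foreach (var refId in refIds)
            {
                if (refId == id)
                {
                    result[i] = boneIndex;
                    break;
                }
            }
            i++; // too late: the loop is already over
        }
        return result;
    }

    // One way to avoid the mistake: let the loop itself own the index.
    public static int[] AssignFixed(IReadOnlyList<int> refIds,
                                    IReadOnlyList<(int id, int boneIndex)> tracked)
    {
        var result = new int[refIds.Count];
        foreach (var (id, boneIndex) in tracked)
        {
            for (int i = 0; i < refIds.Count; i++)
            {
                if (refIds[i] == id)
                {
                    result[i] = boneIndex;
                    break;
                }
            }
        }
        return result;
    }
}
```

With three exported bones whose IDs are 10, 20 and 30 and whose skeleton indices are 3, 5 and 8, the buggy version returns 8, 0, 0 while the fixed version returns 3, 5, 8. That matches what the Debug.Log in SetupExportedBonesSystem was telling us: all but one of the exported bones were left pointing at index zero, the root.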

I let Dreaming know about this—apparently it comes from a dodgy Git merge while fixing a bug for 0.6.4. It should be fixed for real in Latios version 0.6.6.

Achievement unlocked: helped to debug an open source project.

Thanks to this investigation, I’ve learned a bit about how Latios Kinemation works, which should be useful down the line when we get to blending animations and writing IK systems. It’s also very interesting to peer under the hood at what a more complex baking system might look like.

Collisions

In parallel with figuring out this bug, I set about implementing collisions.

To find collisions in Latios Framework, we first need to create collision layers, then call FindPairs to do a broadphase collision check using approximate structures. This finds all the collisions that might happen, i.e. those that aren’t ruled out by the Axis Aligned Bounding Boxes (AABBs) not overlapping at all. The result is a series of candidate pairs of colliders.

When you call FindPairs, you pass it a struct that implements IFindPairsProcessor. That struct has a method Execute which receives a pair of colliders; you can then use a function like DistanceBetween to find out if they actually collided.
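The two-step idea (cheap bounding box test first, exact test only on the survivors) can be sketched in plain C# without any Latios types. This is only an illustration of the concept under simplifying assumptions: the Sphere struct and all the method names are hypothetical, every collider is a sphere, and the broadphase here naively tests every pair, whereas a real broadphase like Psyshock's uses a spatial structure precisely to avoid doing that.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sphere collider: a centre and a radius.
public readonly struct Sphere
{
    public readonly float X, Y, Z, Radius;

    public Sphere(float x, float y, float z, float radius)
    {
        X = x; Y = y; Z = z; Radius = radius;
    }
}

public static class TwoPhaseCollisionDemo
{
    // Broadphase test: do the axis-aligned bounding boxes overlap?
    // A sphere's AABB spans centre +/- radius on each axis.
    public static bool AabbsOverlap(Sphere a, Sphere b)
    {
        float reach = a.Radius + b.Radius;
        return Math.Abs(a.X - b.X) <= reach
            && Math.Abs(a.Y - b.Y) <= reach
            && Math.Abs(a.Z - b.Z) <= reach;
    }

    // Narrowphase test: are the spheres actually touching or overlapping?
    public static bool SpheresTouch(Sphere a, Sphere b)
    {
        float dx = a.X - b.X, dy = a.Y - b.Y, dz = a.Z - b.Z;
        float reach = a.Radius + b.Radius;
        return dx * dx + dy * dy + dz * dz <= reach * reach;
    }

    // Returns index pairs (i, j) for every real hit between the two layers.
    public static List<(int, int)> FindHits(IReadOnlyList<Sphere> layerA,
                                            IReadOnlyList<Sphere> layerB)
    {
        var hits = new List<(int, int)>();
        for (int i = 0; i < layerA.Count; i++)
        {
            for (int j = 0; j < layerB.Count; j++)
            {
                // Skip pairs the bounding boxes already rule out.
                if (!AabbsOverlap(layerA[i], layerB[j]))
                    continue;

                // Only candidates reach the exact test.
                if (SpheresTouch(layerA[i], layerB[j]))
                    hits.Add((i, j));
            }
        }
        return hits;
    }
}
```

A unit sphere at the origin and another at (1.5, 1.5, 0) is a nice example of why both steps are needed: their bounding boxes overlap, so the pair is a broadphase candidate, but the centres are about 2.12 apart, which is more than the combined radius of 2, so the exact test rejects it.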

I asked Dreaming for advice on creating a collision layer in an unmanaged ISystem, and he pointed me to an example in the Latios Space Shooter Sample, which I used as a template in the following.

So here’s a version for my project. I created a very basic tag component called Projectile, and wrote this:

    using Unity.Entities;
    using Unity.Burst;
    using Latios;
    using Latios.Psyshock;
    using Unity.Collections;

    [BurstCompile]
    [UpdateInGroup(typeof(LevelSystemGroup))]
    [UpdateAfter(typeof(VelocitySystem))]
    partial struct ProjectileCollisionLayerSystem : ISystem, ISystemNewScene
    {
        BuildCollisionLayerTypeHandles _handles;
        LatiosWorldUnmanaged _latiosWorld;

        [BurstCompile]
        public void OnCreate(ref SystemState state)
        {
            _handles = new BuildCollisionLayerTypeHandles(ref state);
            _latiosWorld = state.GetLatiosWorldUnmanaged();
        }

        [BurstCompile]
        public void OnDestroy(ref SystemState state)
        {
        }

        public void OnNewScene(ref SystemState state)
        {
            _latiosWorld
                .sceneBlackboardEntity
                .AddOrSetCollectionComponentAndDisposeOld
                    ( new ProjectileCollisionLayer()
                    );
        }

        [BurstCompile]
        public void OnUpdate(ref SystemState state)
        {
            _handles.Update(ref state);

            var settings = BuildCollisionLayerConfig.defaultSettings;

            EntityQuery projectileQuery =
                SystemAPI
                    .QueryBuilder()
                    .WithAll<Projectile, Collider>()
                    .Build();

            state.Dependency =
                Physics
                    .BuildCollisionLayer(projectileQuery, _handles)
                    .WithSettings(settings)
                    .ScheduleParallel
                        ( out CollisionLayer projectileLayer
                        , Allocator.Persistent
                        , state.Dependency
                        );

            var projectileLayerComponent =
                new ProjectileCollisionLayer
                    { Layer = projectileLayer
                    };

            _latiosWorld
                .sceneBlackboardEntity
                .SetCollectionComponentAndDisposeOld
                    ( projectileLayerComponent
                    );
        }
    }

This creates a job to build a collision layer, and updates something called a collection component. What is that? It’s another helpful feature of Latios Framework. It’s essentially a way to use data structures that wouldn’t normally be allowed in IComponentData structs as if they were components. Here’s the code…

    using Unity.Entities;
    using Unity.Jobs;
    using Latios;
    using Latios.Psyshock;

    partial struct ProjectileCollisionLayer : ICollectionComponent
    {
        public CollisionLayer Layer;

        public ComponentType AssociatedComponentType
            => ComponentType.ReadWrite<ProjectileCollisionLayerTag>();

        public JobHandle TryDispose(JobHandle inputDeps)
            => Layer.IsCreated ? Layer.Dispose(inputDeps) : inputDeps;
    }

    partial struct ProjectileCollisionLayerTag : IComponentData
    {
    }

So a collection component is not an actual component, but each collection component has a companion tag component that is part of ECS. Latios keeps track of this tag and, at the end of a frame, allocates or disposes the collection component if the matching tag gets added or deleted. But that’s only for normal entities! For the ‘blackboard entities’ that act as a central data store in the Latios Framework, you can add or remove collection components directly with dedicated methods.

Since a collision layer has some funky data structures, you can’t store it in a normal component and have to store it in a collection component. Better yet, it can be smart about interacting with the job system… I think? There is some automatic dependency management around the collection component, although I’m not entirely sure how it knows when BuildCollisionLayer is finished… Something to look into for next time.

I use an essentially identical job to build a CollisionLayer that contains just the player. This seems like it must have quite a bit of overhead… I don’t know, is there a better way when you only want to test one collider against a collision layer?

Having generated both our collision layers, we need a system to read them and call FindPairs. That looks like this:

    using Unity.Entities;
    using Unity.Burst;
    using Unity.Collections;
    using Latios;
    using Latios.Psyshock;
    using UnityEngine;

    [BurstCompile]
    [UpdateInGroup(typeof(LevelSystemGroup))]
    [UpdateAfter(typeof(PlayerCollisionLayerSystem))]
    [UpdateAfter(typeof(ProjectileCollisionLayerSystem))]
    partial struct PlayerCollisionSystem : ISystem
    {
        LatiosWorldUnmanaged _latiosWorld;

        [BurstCompile]
        public void OnCreate(ref SystemState state)
        {
            _latiosWorld = state.GetLatiosWorldUnmanaged();
        }

        [BurstCompile]
        public void OnDestroy(ref SystemState state)
        {
        }

        //[BurstCompile]
        public void OnUpdate(ref SystemState state)
        {
            var projectileCollisionLayer =
                _latiosWorld
                    .sceneBlackboardEntity
                    .GetCollectionComponent<ProjectileCollisionLayer>(true)
                    .Layer;

            var playerCollisionLayer =
                _latiosWorld
                    .sceneBlackboardEntity
                    .GetCollectionComponent<PlayerCollisionLayer>(true)
                    .Layer;

            var processor = new DamagePlayerProcessor
            {
            };

            state.Dependency =
                Latios.Psyshock.Physics
                    .FindPairs
                        ( projectileCollisionLayer
                        , playerCollisionLayer
                        , processor
                        )
                    .ScheduleParallel(state.Dependency);
        }
    }

    struct DamagePlayerProcessor : IFindPairsProcessor
    {
        public void Execute(in FindPairsResult result)
        {
            if
                ( Latios.Psyshock
                    .Physics
                    .DistanceBetween
                        ( result.bodyA.collider
                        , result.bodyA.transform
                        , result.bodyB.collider
                        , result.bodyB.transform
                        , 0f
                        , out var hitData
                        )
                )
            {
                Debug.Log("Player was hit by a bullet");
            }
        }
    }

Right now we don’t do anything with our collision except record that it happened in the console.

Another problem came up in testing this, with Unity complaining about job dependencies. It turns out that Psyshock depends on some extra Latios system group features, and by creating a custom ComponentSystemGroup I had accidentally locked myself out of them. To fix this, I made LevelSystemGroup inherit from Latios’s SuperSystem, which required a small change to replace OnCreate (now sealed) with CreateSystems…

    [UpdateBefore(typeof(TransformSystemGroup))]
    public class LevelSystemGroup : SuperSystem
    {
        protected override void CreateSystems()
        {
            RequireForUpdate<Level>();
        }
    }

With this done, the above systems would run. Now to test it: I created a sphere with a sphere collider and applied the Projectile tag component. If I fly the character through the bullet, we see the expected “Player was hit by a bullet”. Hooray!

I still need to implement collisions with the level walls, and actually do something with these collisions (stick to or bounce off walls with appropriate animations, die and respawn when hit with appropriate animations), not to mention create enemies to spawn lots of bullets, and visual effects, and so on and so forth—but we can at least detect collisions now. That’s a good start.
